ROS-I Consortium Americas Hosts Training Event in Seattle, Washington

The ROS-Industrial Americas consortium hosted its second training event of 2018 in Seattle on July 17-19, attended by 15 students from companies across various industries. The three-day event, hosted by Levi Armstrong and Michael Ripperger from Southwest Research Institute, featured basic and advanced tracks in which participants explored ROS-related topics ranging from ROS architecture and communication to motion planning, perception, and code testing.

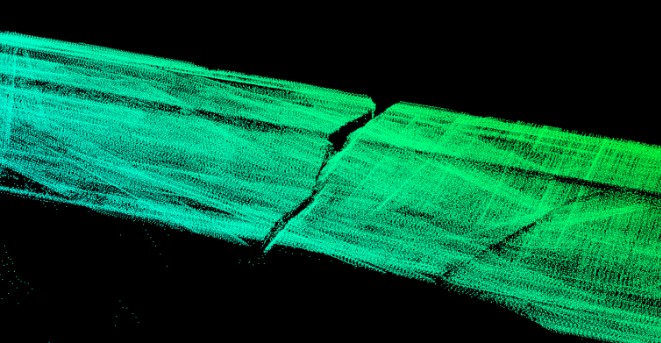

New to this particular training session was the inclusion of a Python node within the Perception Pipeline, added to help students understand how C++ and Python nodes interact. Additional content on RVIZ GUI creation and debugging tools was also featured. To explore these new modules and the rest of the training content, check out the ROS-I training wiki.

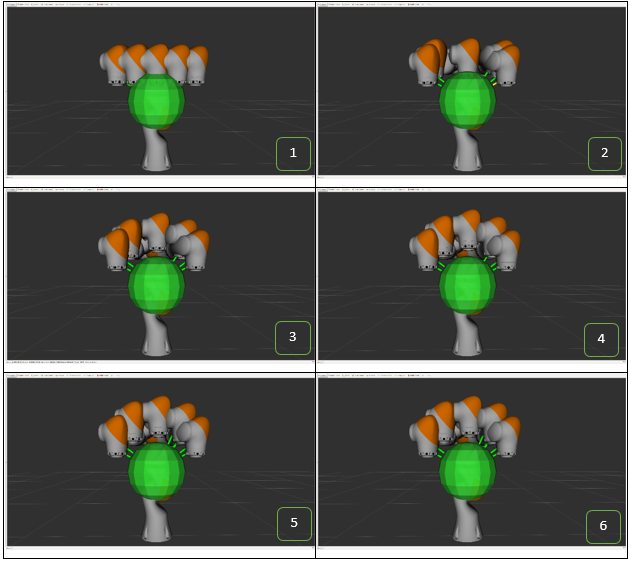

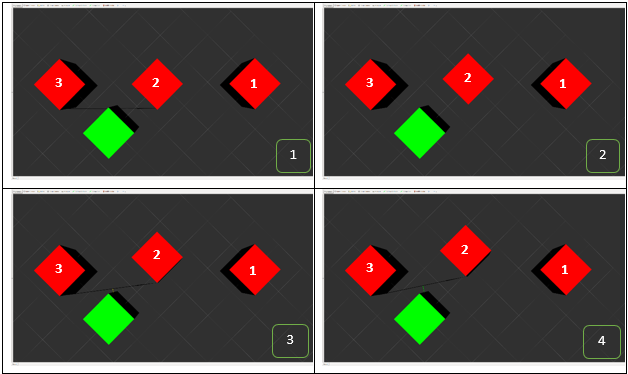

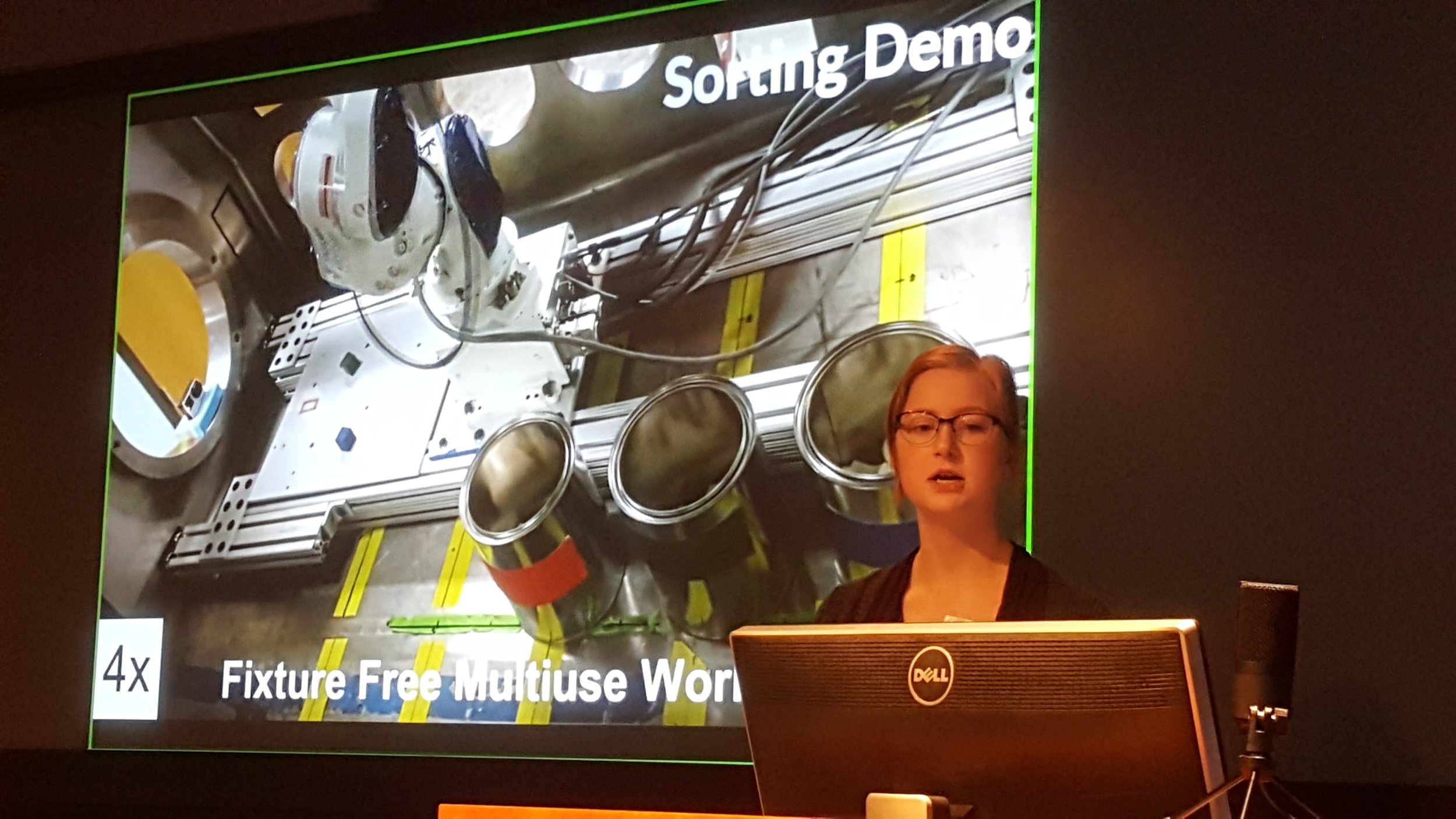

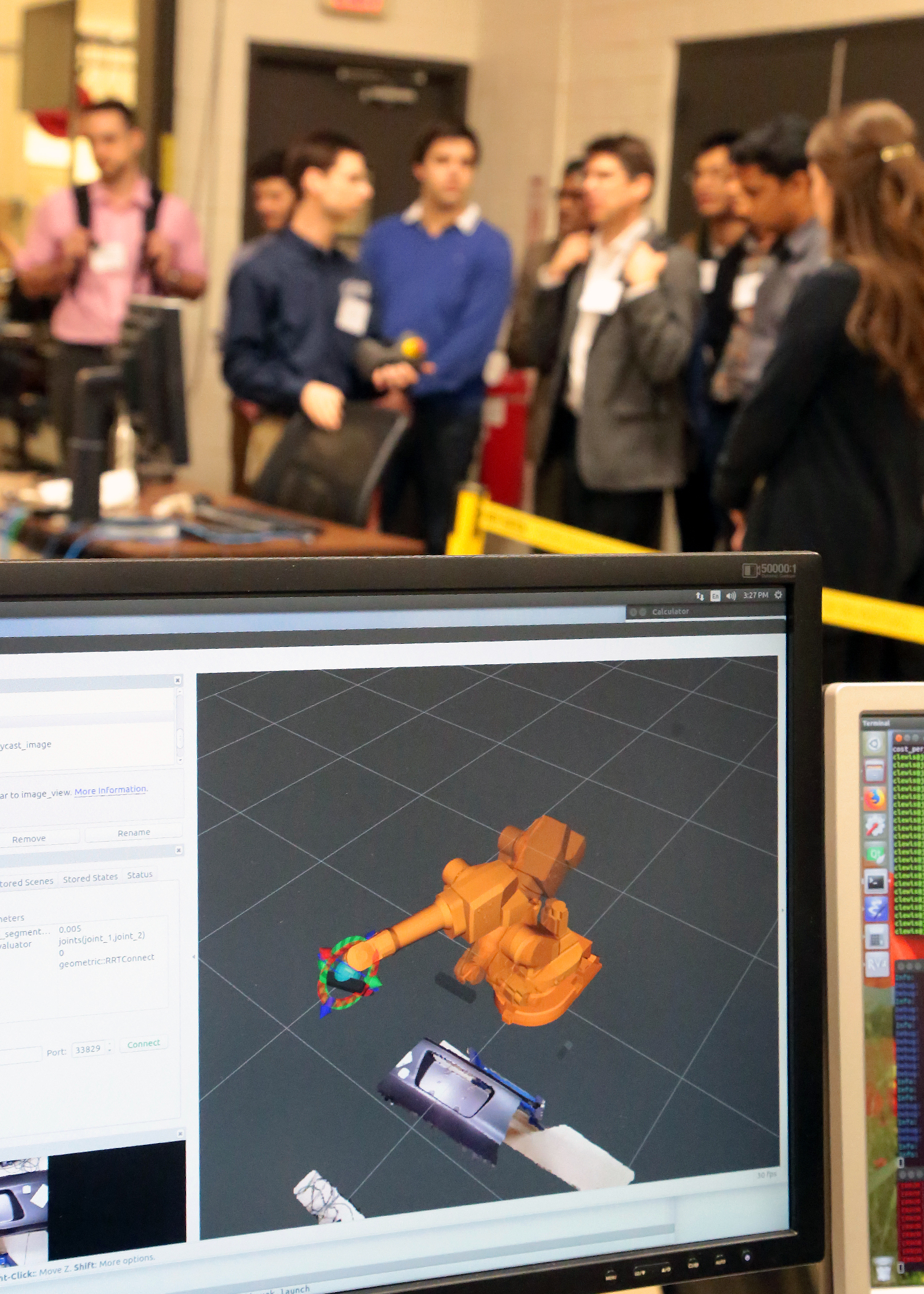

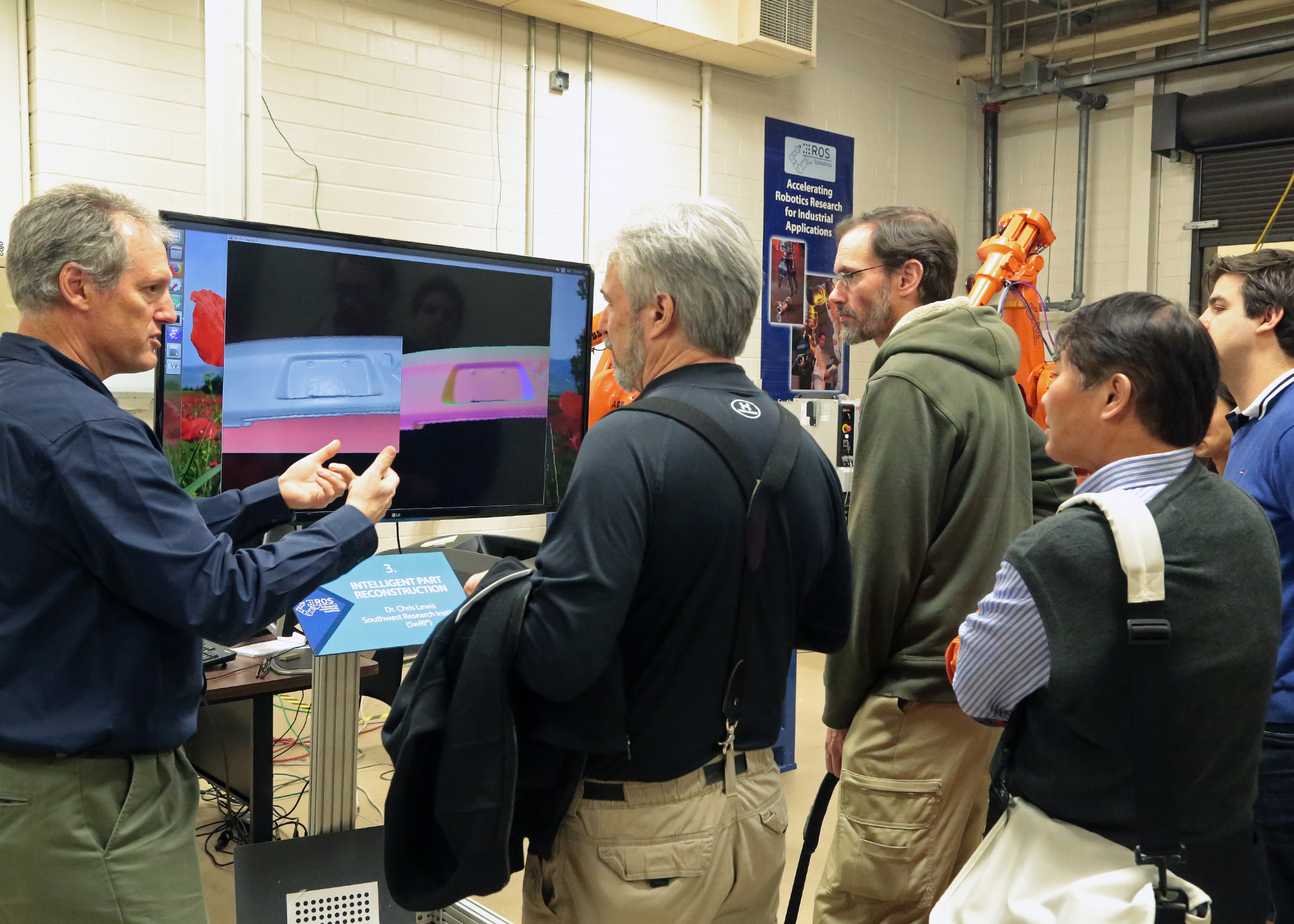

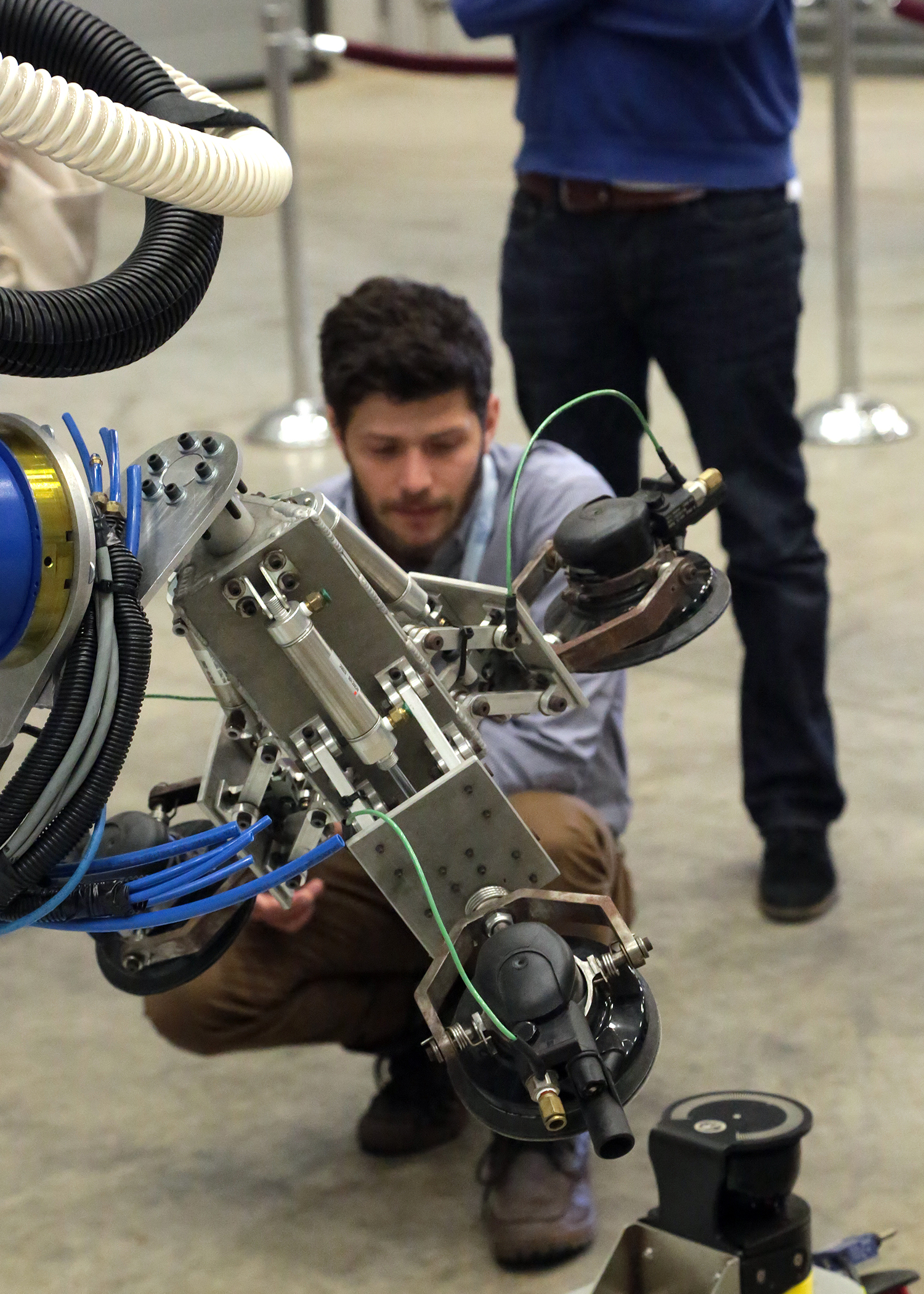

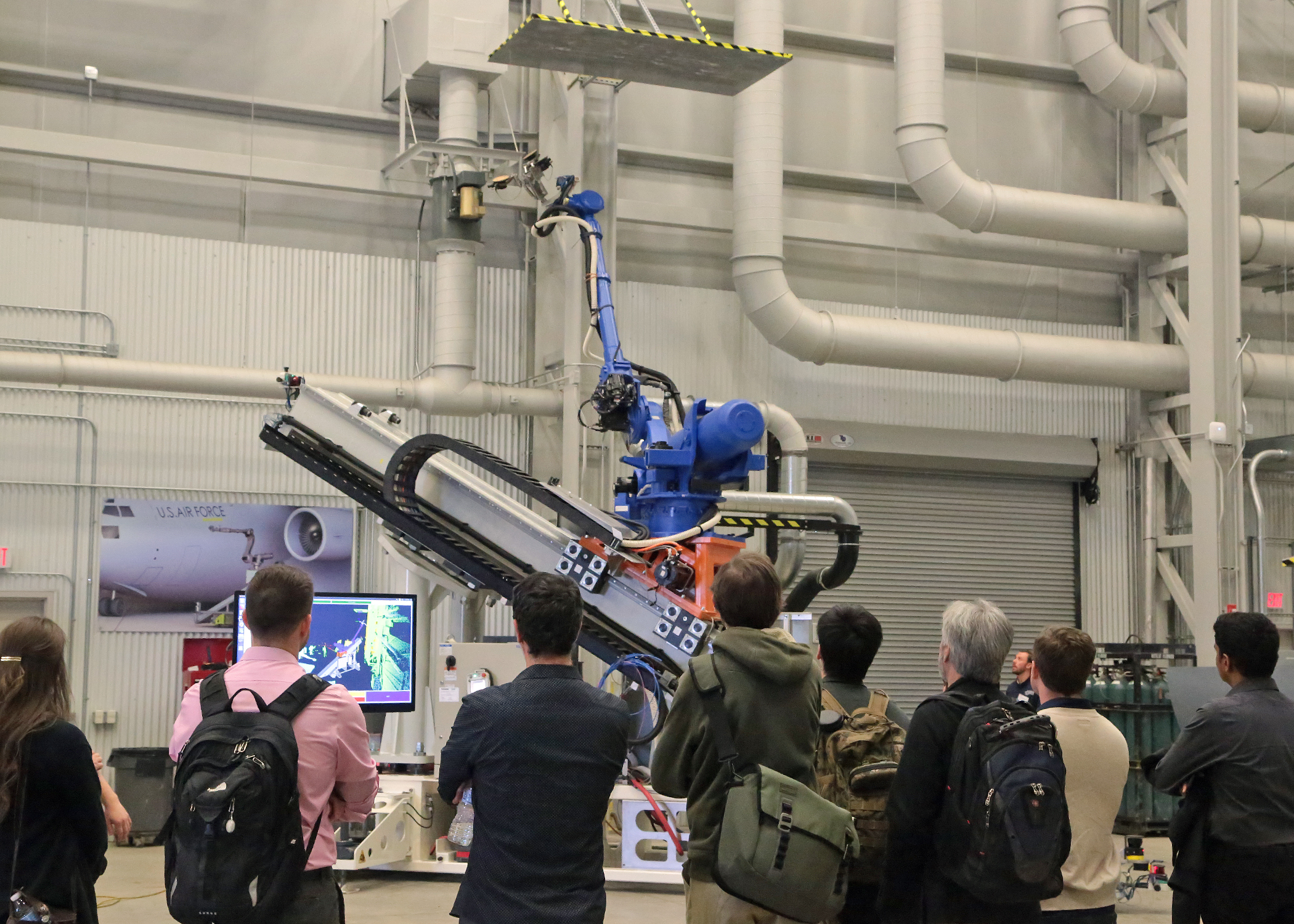

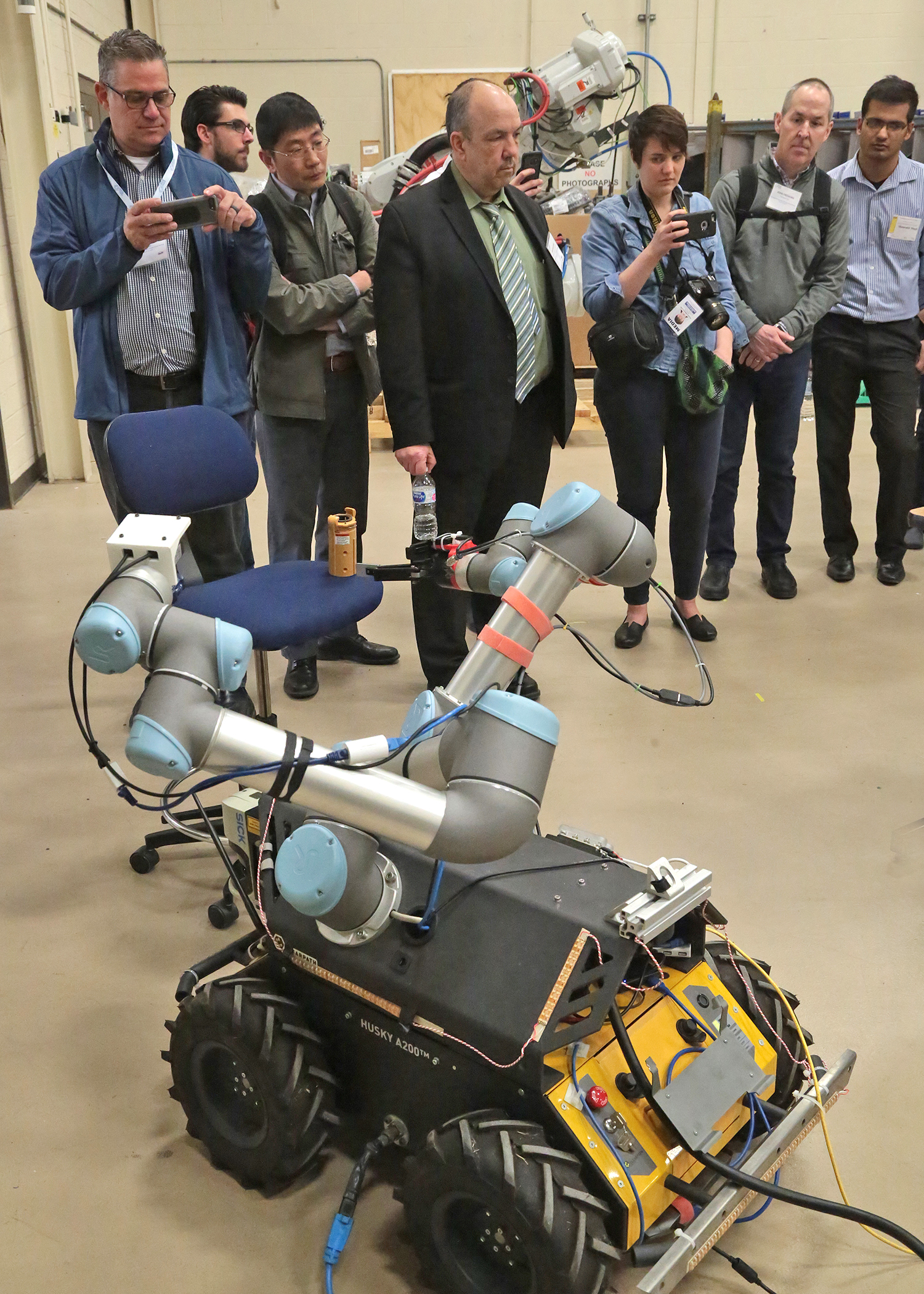

The training event combined lectures with hands-on coding and hardware demos. At the end of the event, students were able to interact with the provided UR5 robots and test the code they had written on them. Overall, the training was a great opportunity to learn more about ROS and to network with ROS-I Consortium members and their partners in the robotics field who are leveraging ROS in their own applications.

Thank you to our hosts in the Emerald City and to all of our attendees for making this class a great success.

A third ROS training event for this year is currently in the works for the fall, so stay tuned for more details! Also, don't forget that full Consortium members are able to host ROS-I Consortium Americas training events, such as this one in Seattle. As always, do not hesitate to offer feedback on how training can be improved to meet your needs. We are always interested in member and community feedback!