Unlocking the Power of ROS 2 for Industrial Users: ROS-I October Training Highlights

The ROS-Industrial Consortium Americas hosted a hybrid (online and in-person) ROS 2 training class October 22-24. The three-day ROS-I developers training was attended by trainees from across the ROS-Industrial Consortium membership.

Whether you are just starting out with ROS 2 or are already experienced with it, the training offered something for all levels. Newcomers learned the ropes and built a solid foundation in the framework’s core concepts, while experienced developers delved into advanced topics such as motion planning, including tuning optimization constraint parameters.

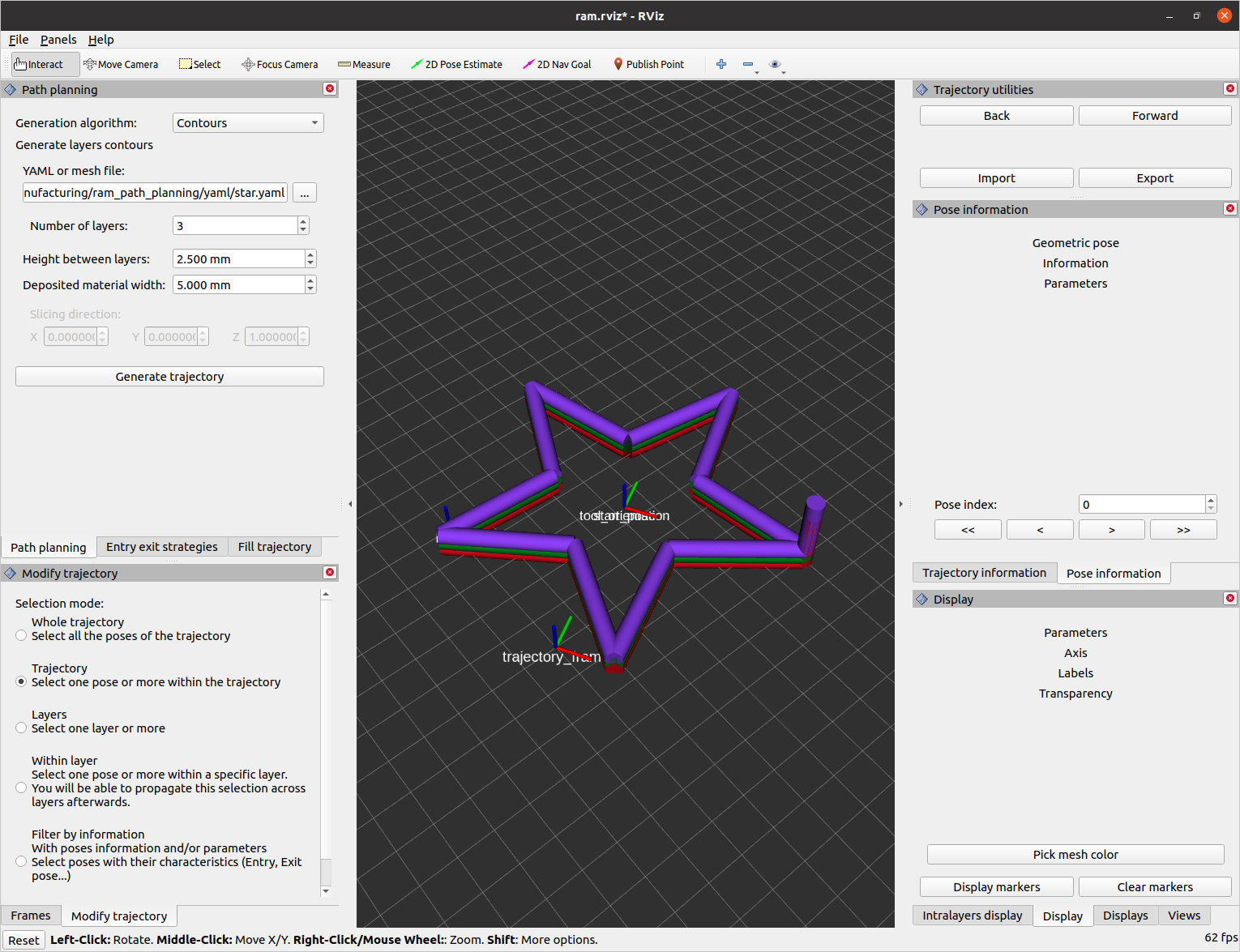

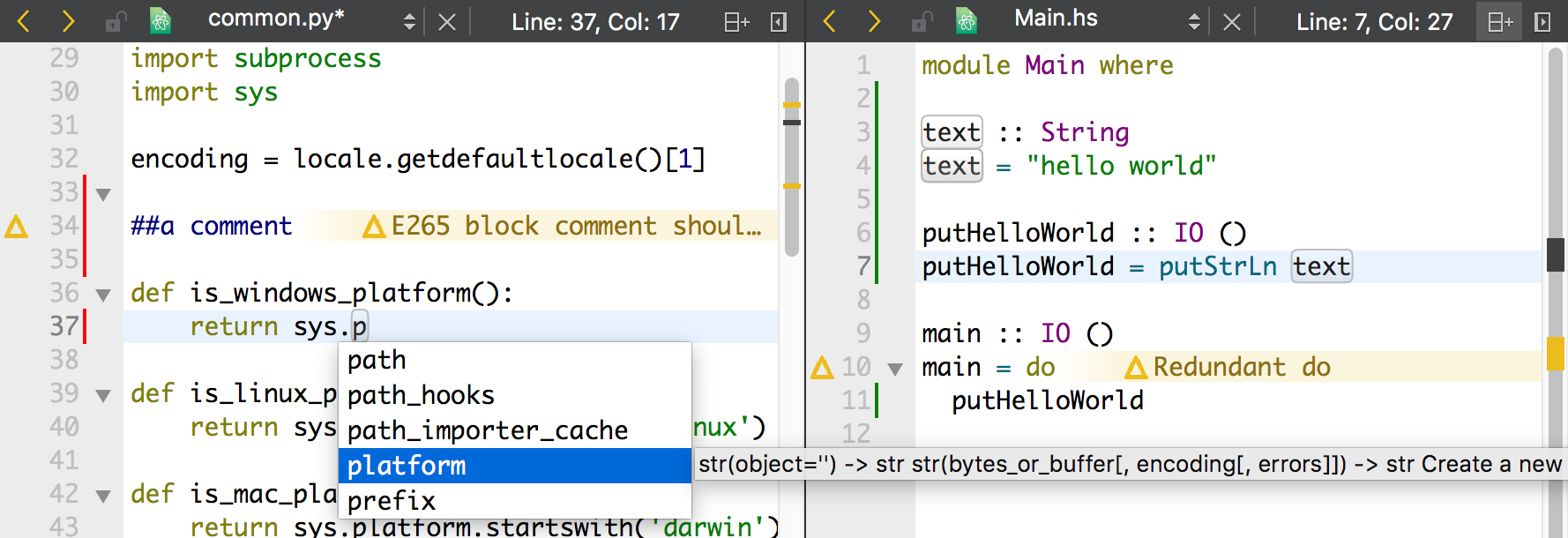

The training currently uses ROS 2 Humble, delivered through an AWS EC2 instance. On the first day, the attendees were divided into two groups: beginner ROS developers and advanced ROS developers. The advanced group learned how to set up a basic motion planning pipeline in Tesseract and then refined it to increase its robustness. By the end of the day, students were adding their own customizations to the pipeline.

The beginner group focused on the fundamentals of ROS 2, including workspace structure and best practices for adding scripts and building the workspace. They also learned about creating packages and nodes, topics (publishers and subscribers), messages, services, actions, launch files, and command-line parameters.

On Day 2, the two groups came together to learn how to develop URDF/XACRO files to describe a robot, how to use TF, and how to create a MoveIt package for motion planning with an industrial robot in a simulation environment.

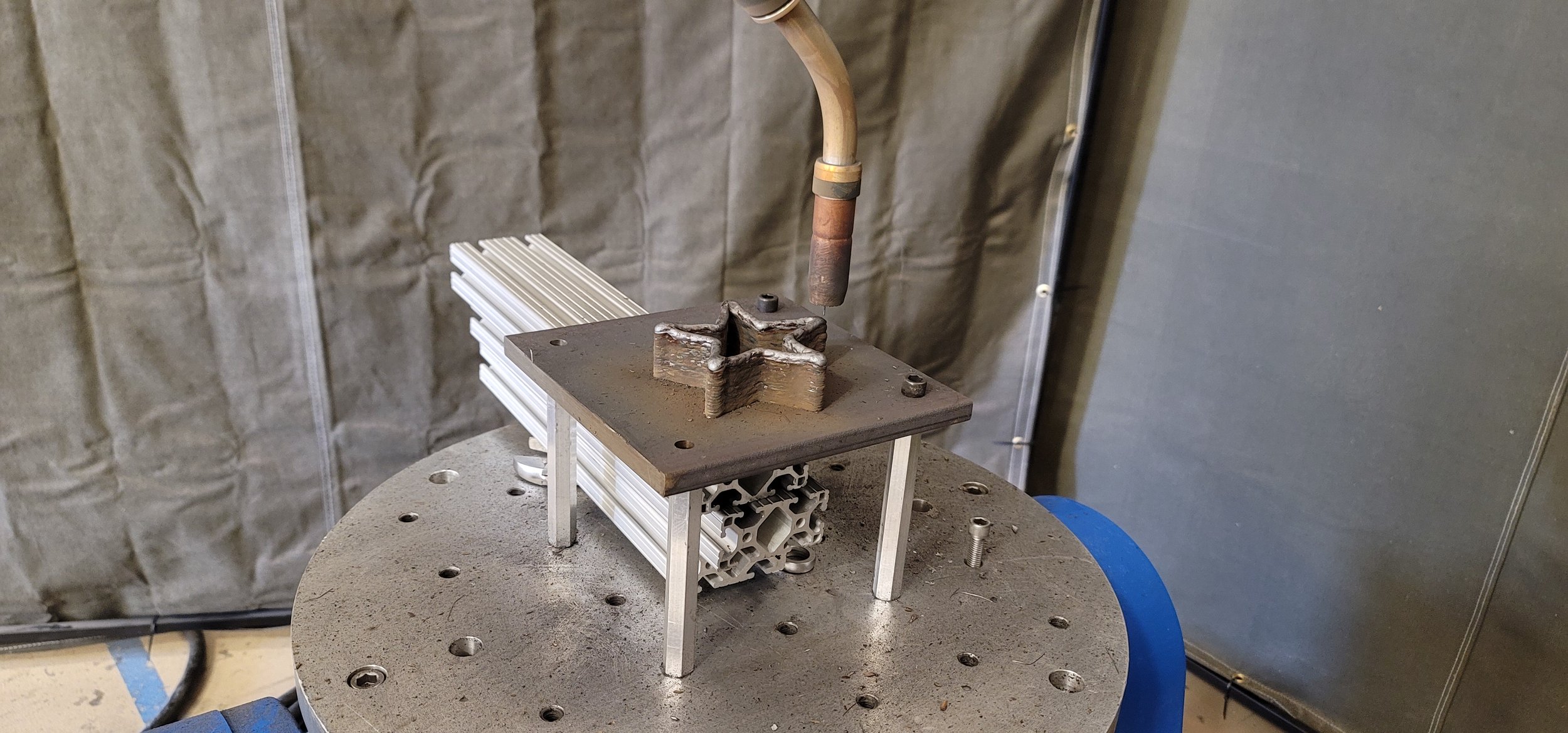

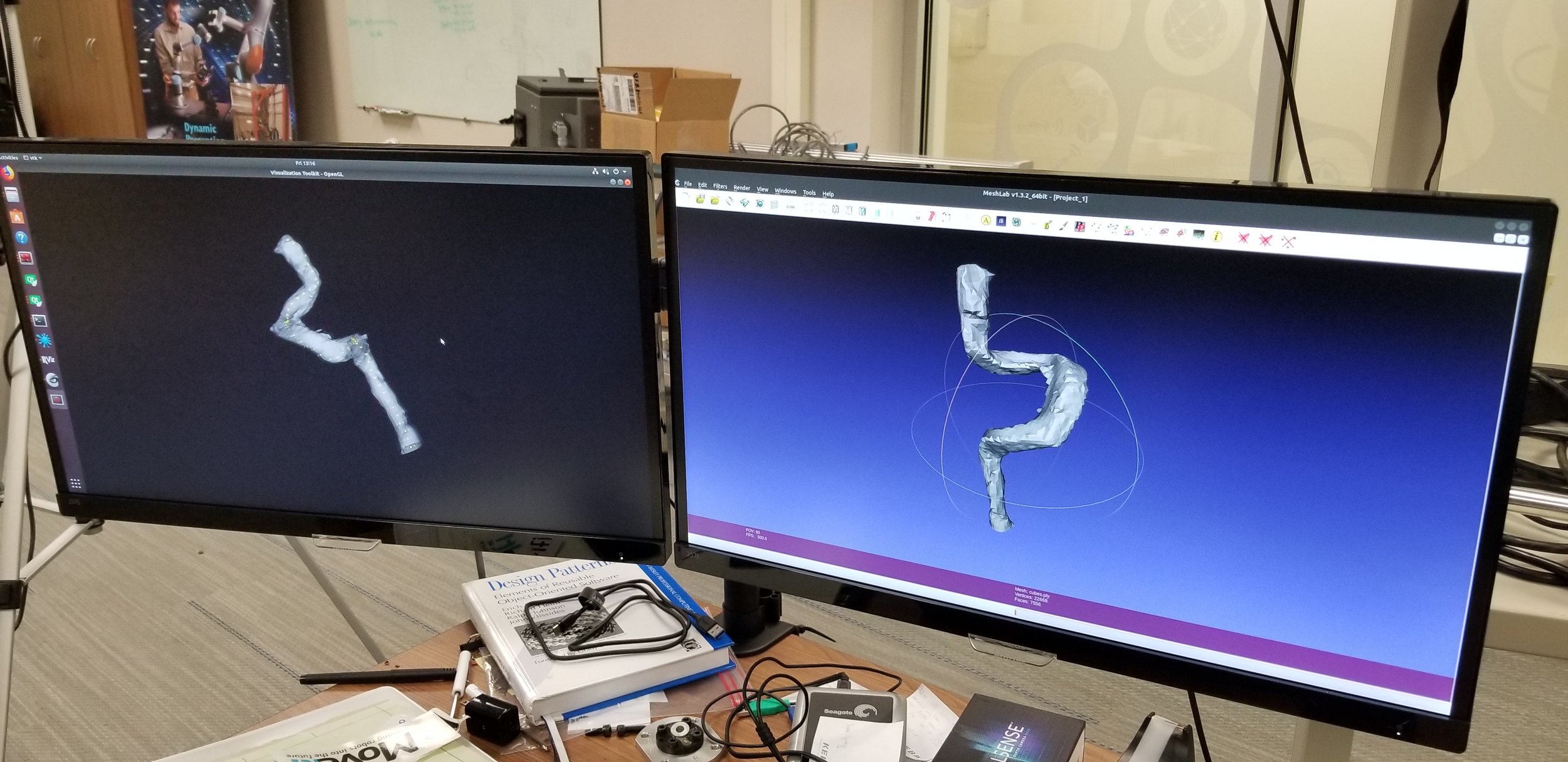

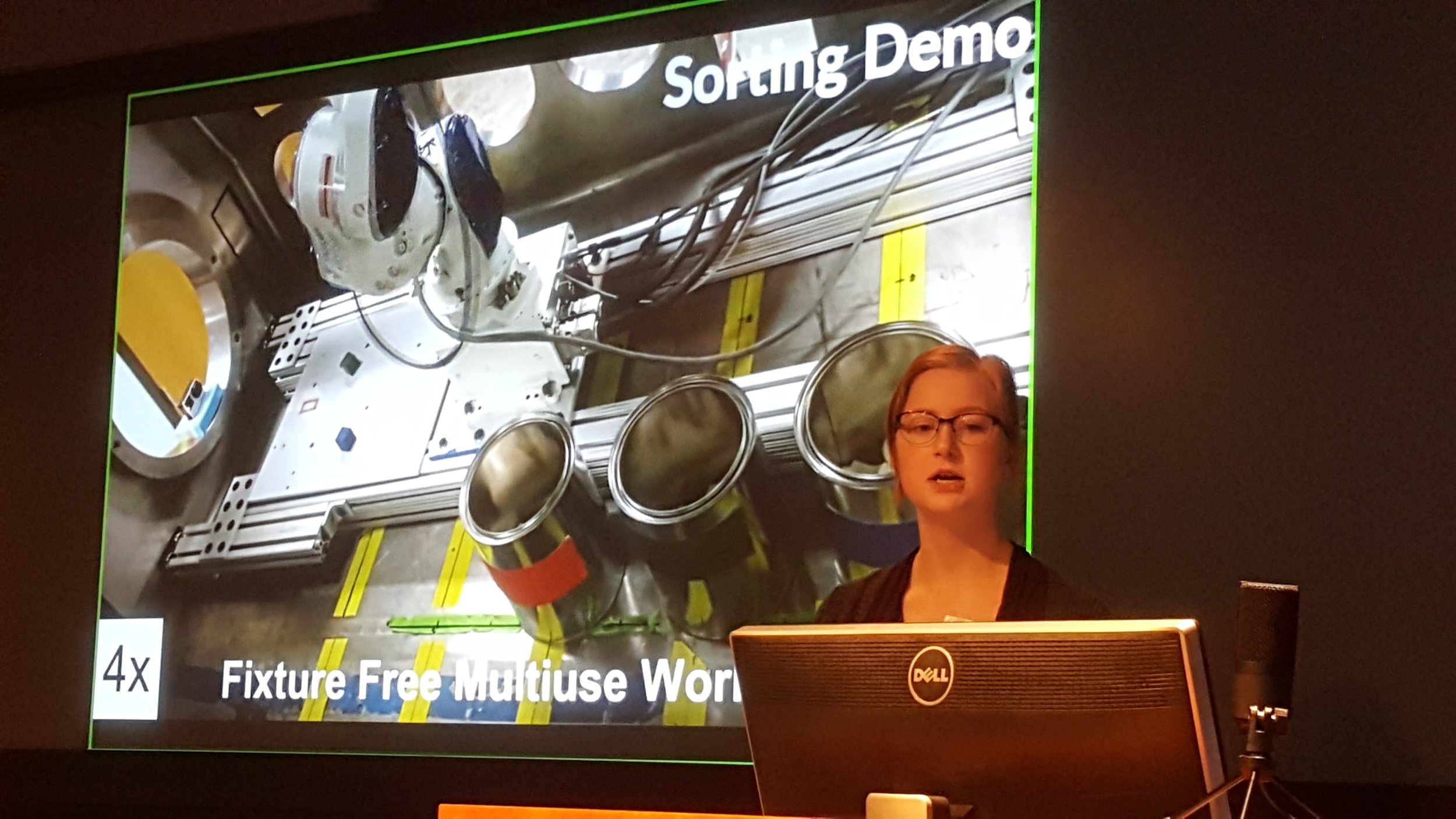

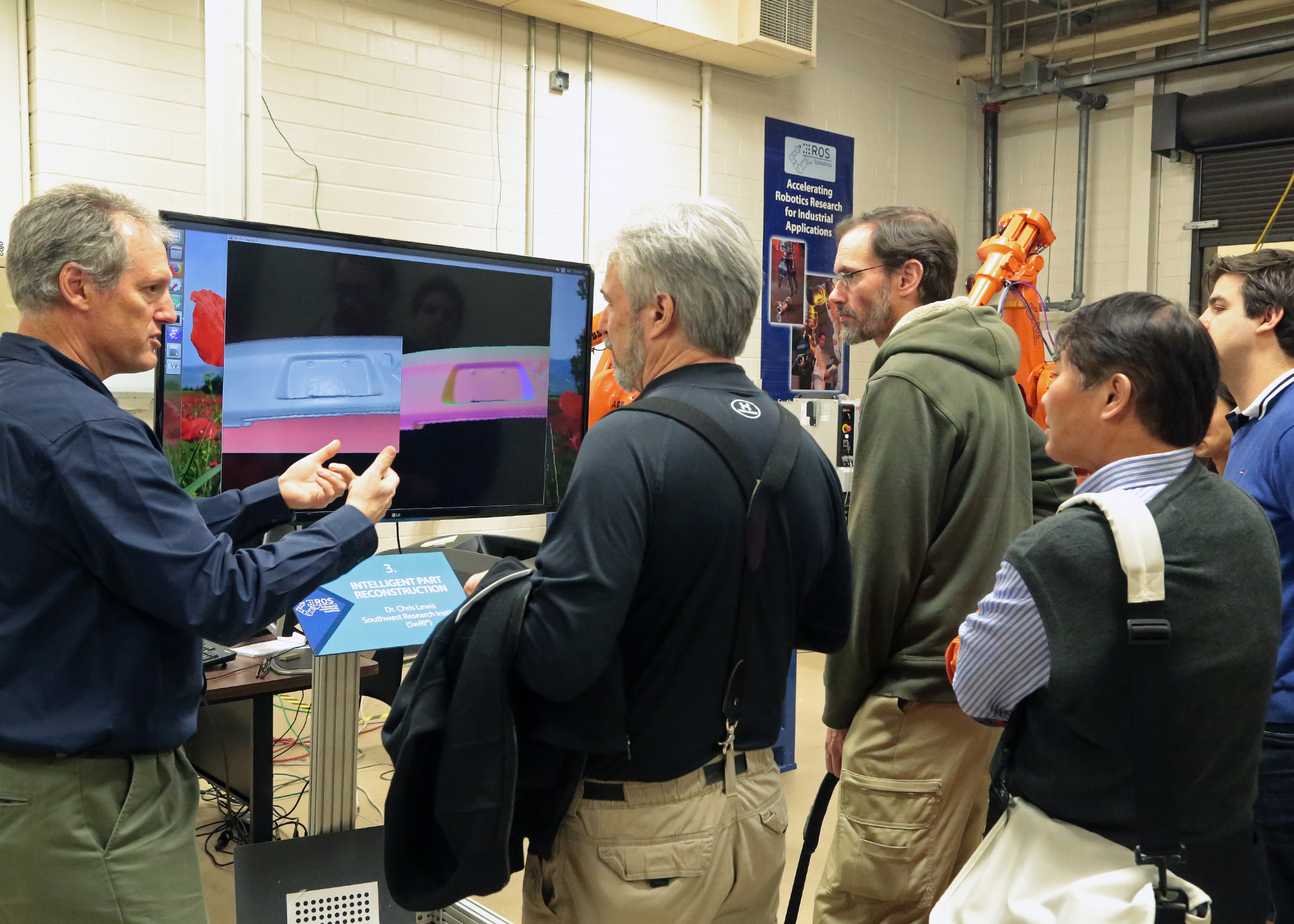

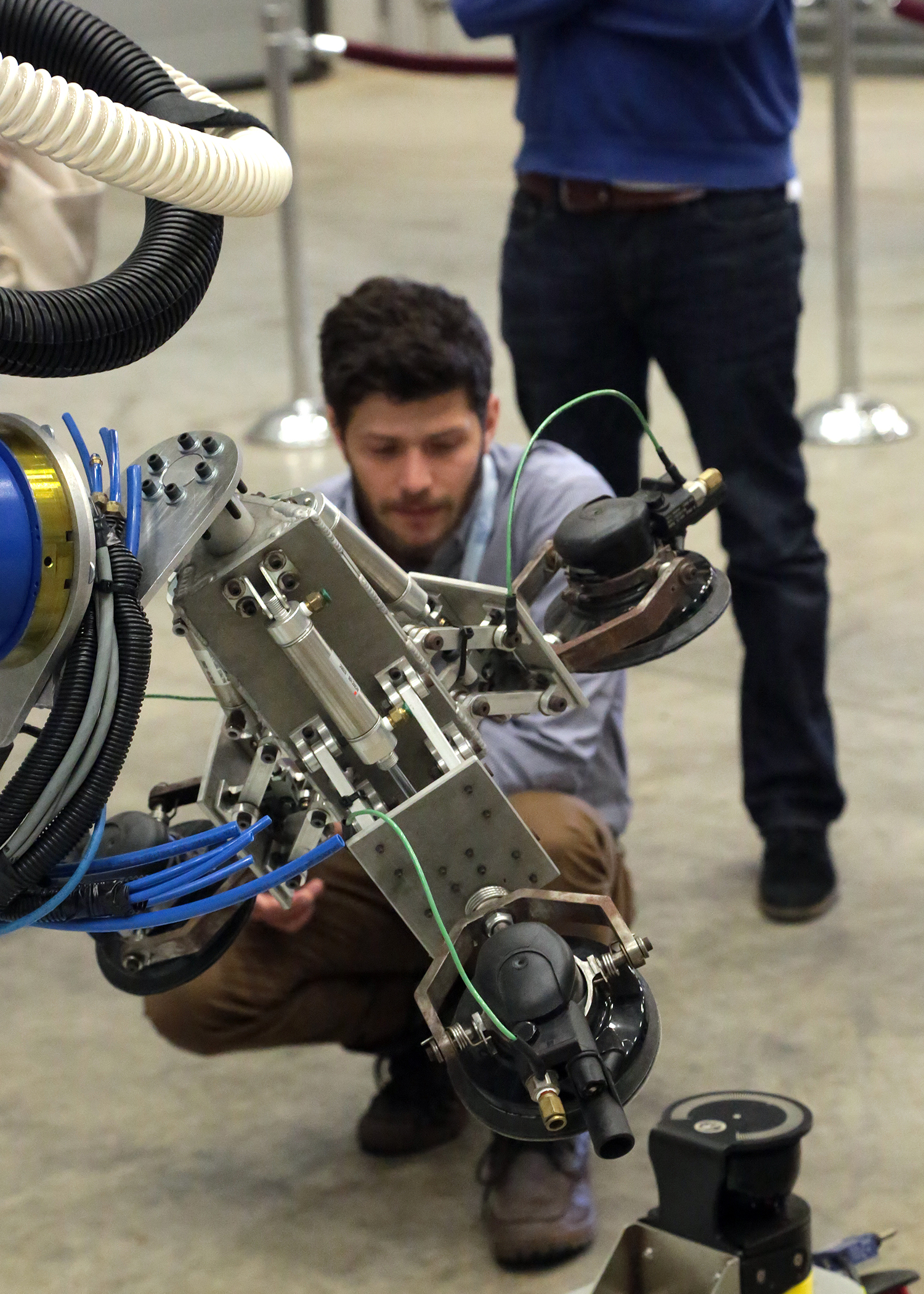

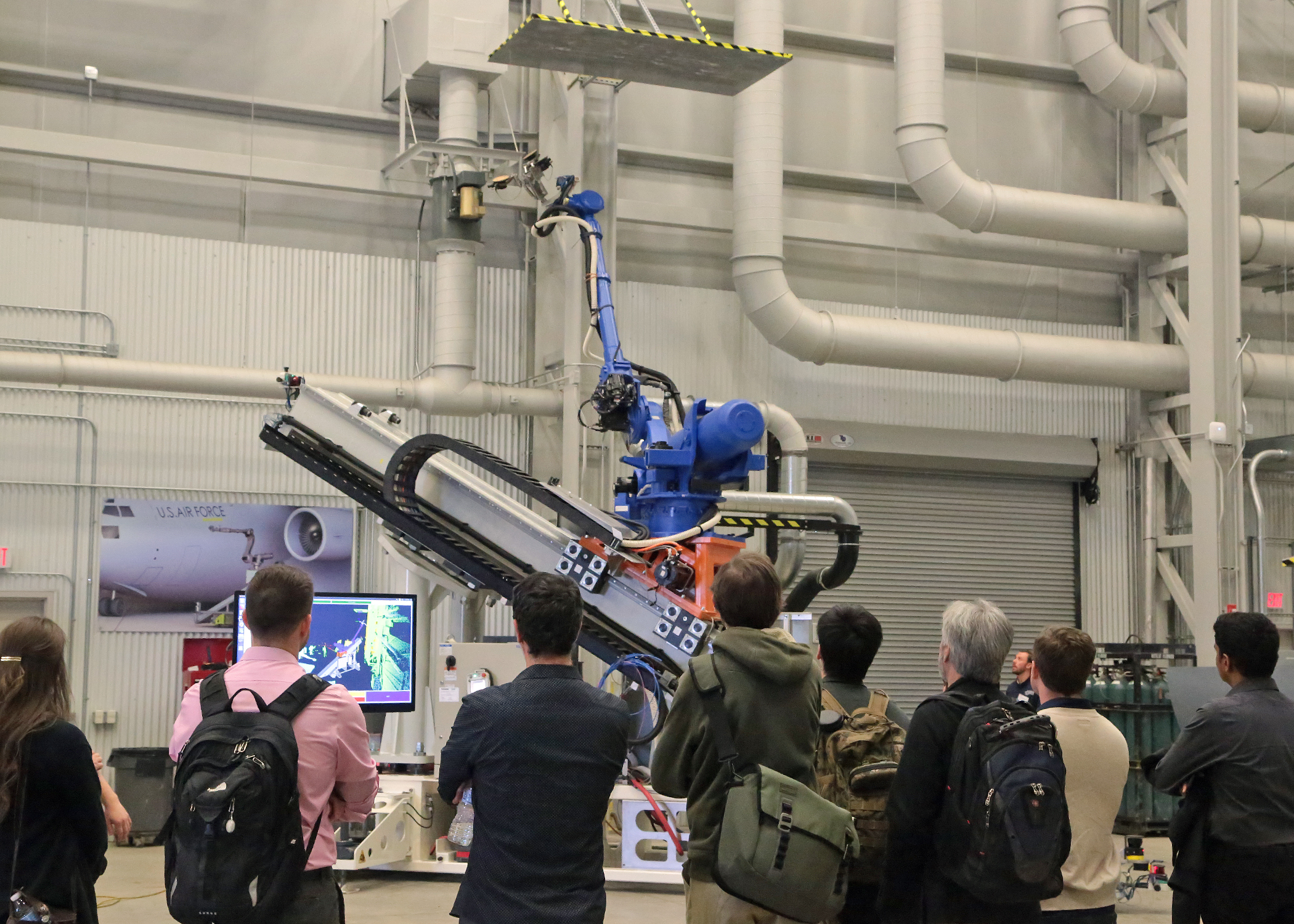

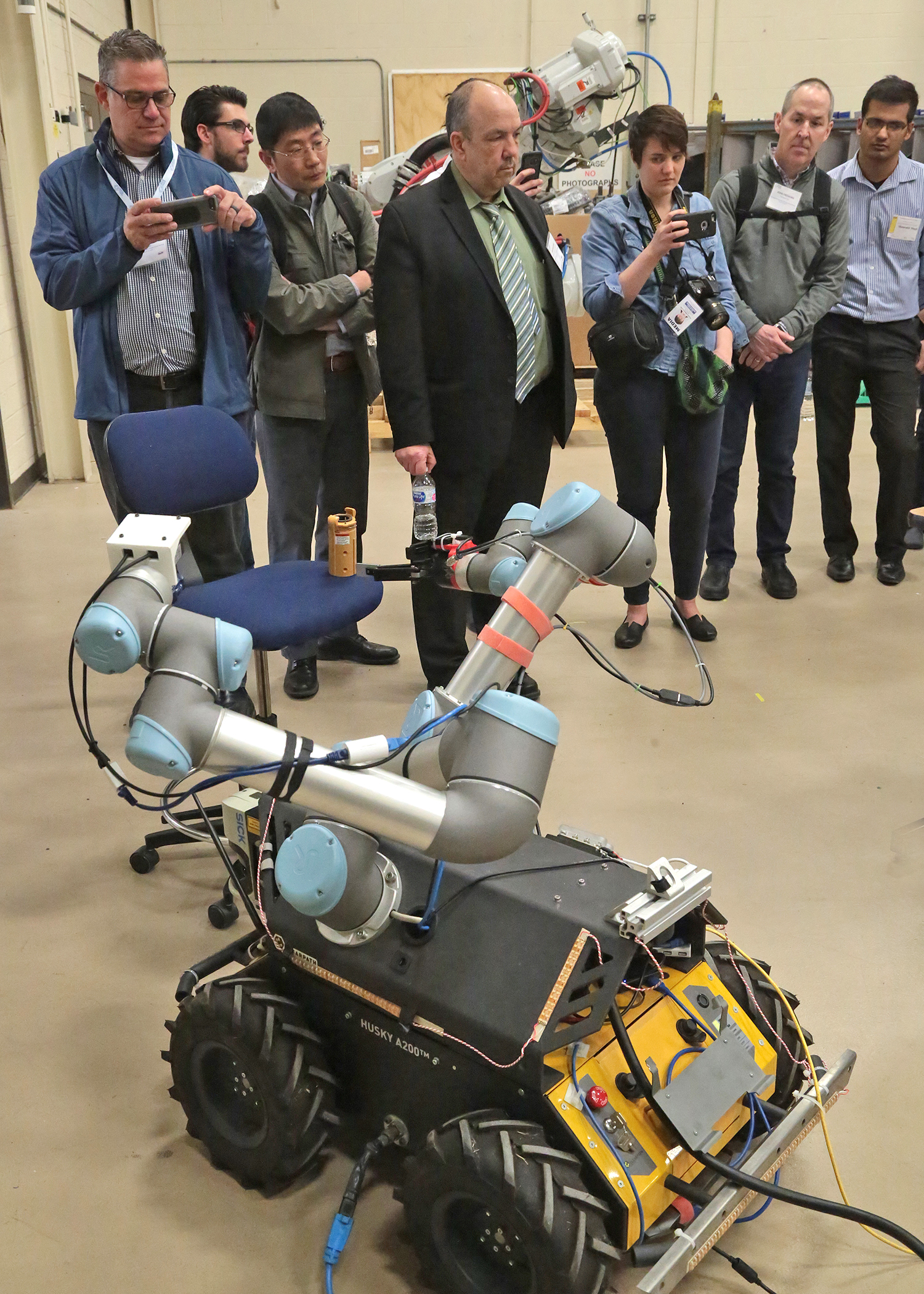

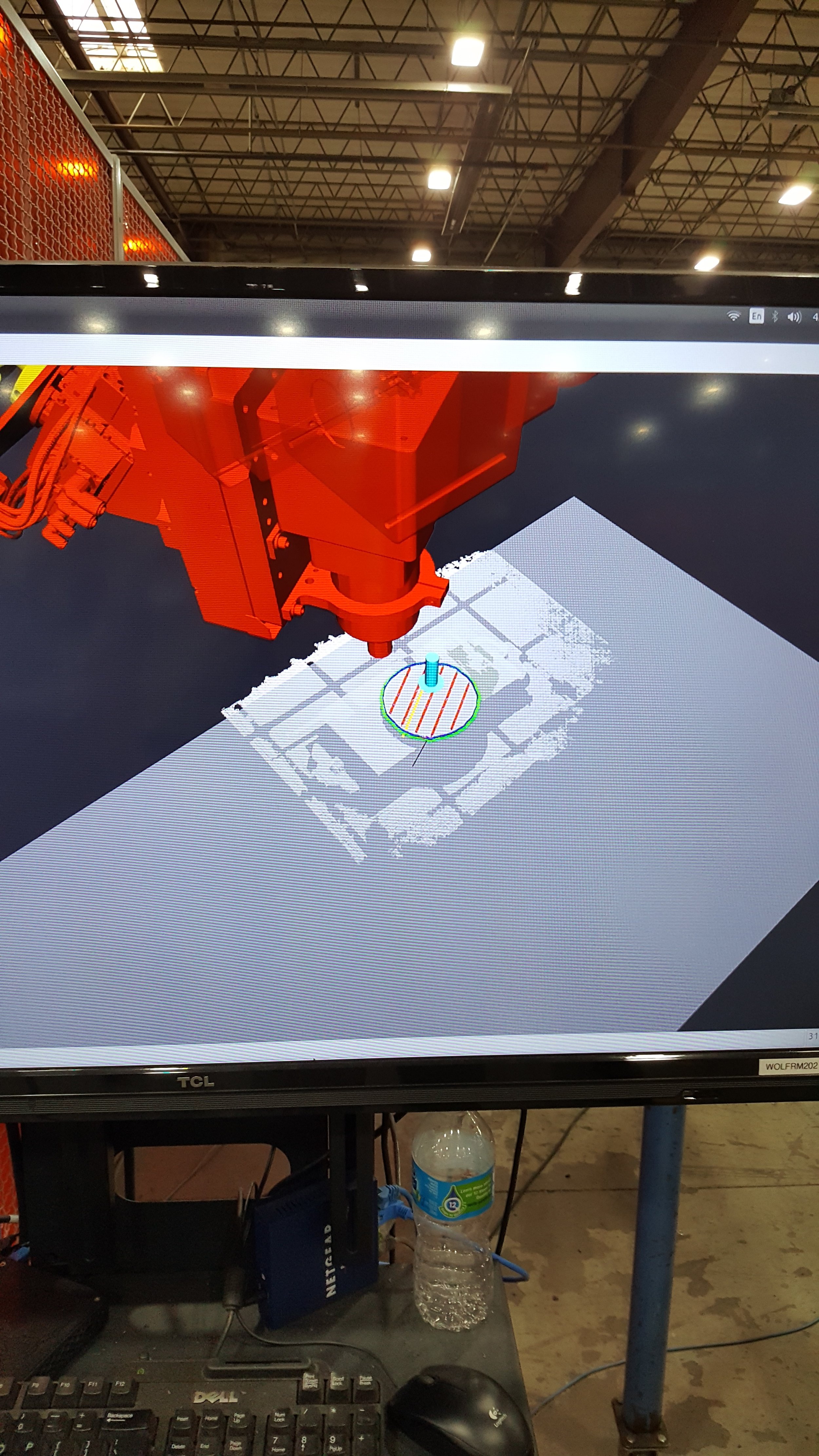

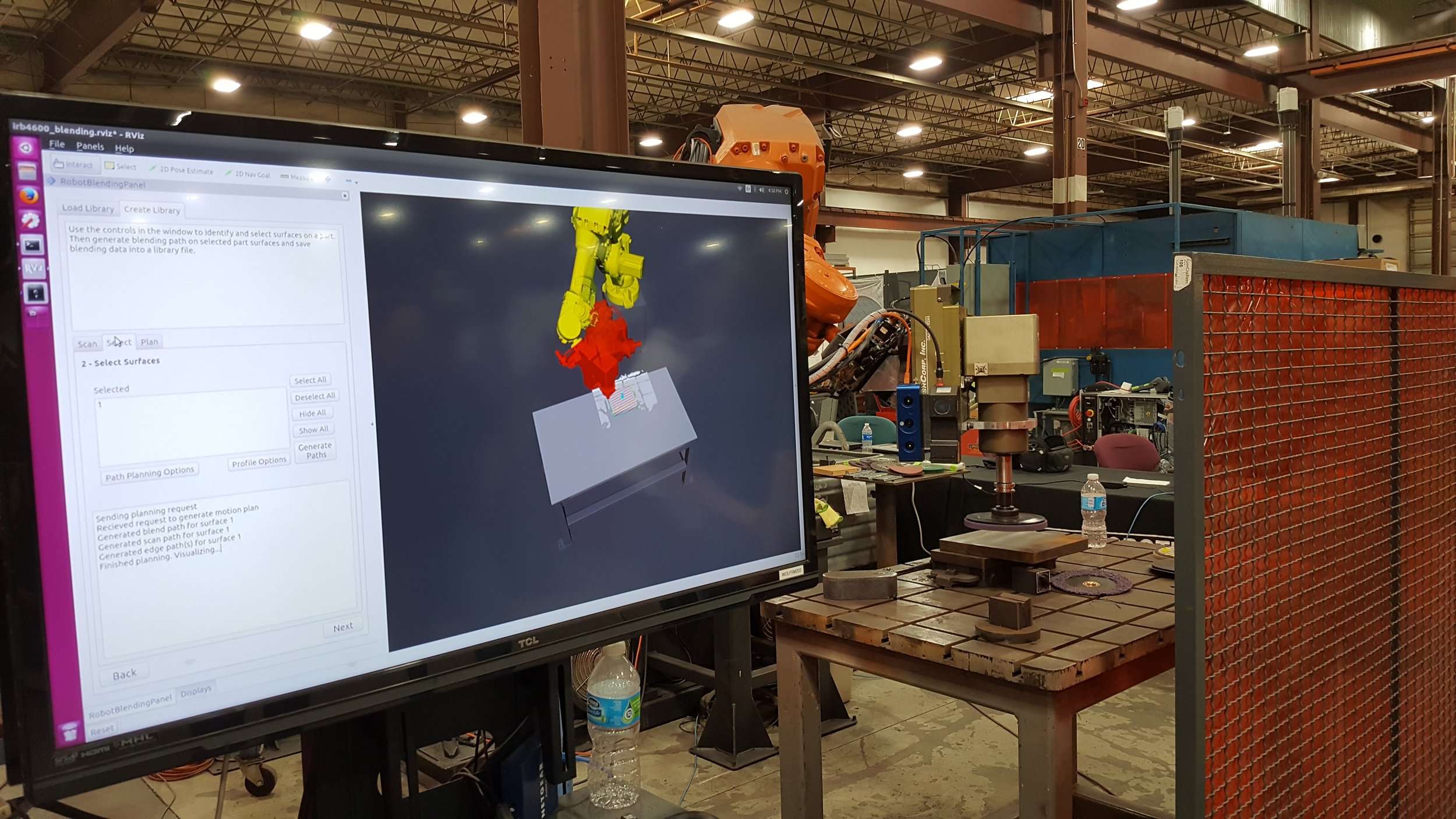

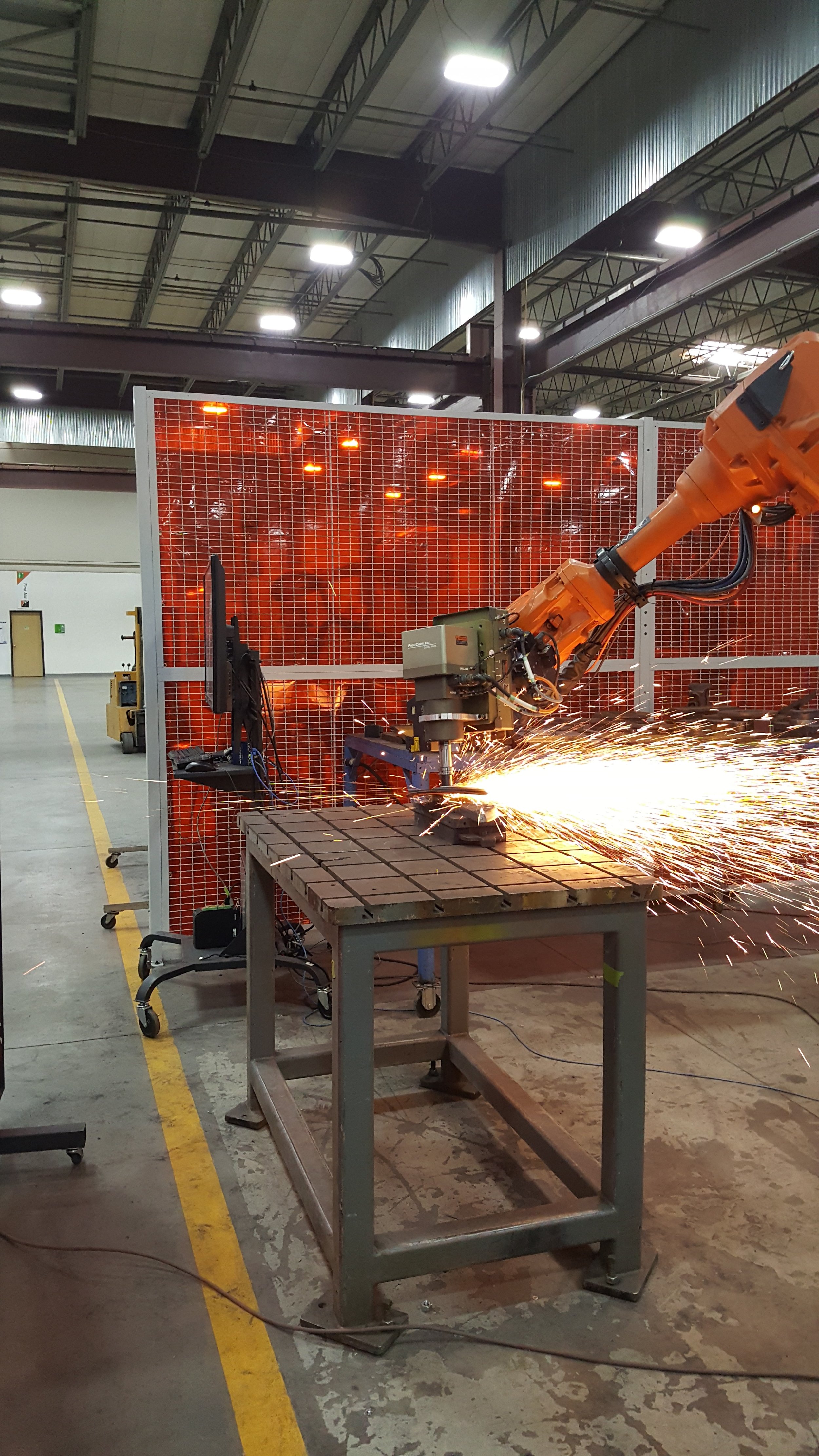

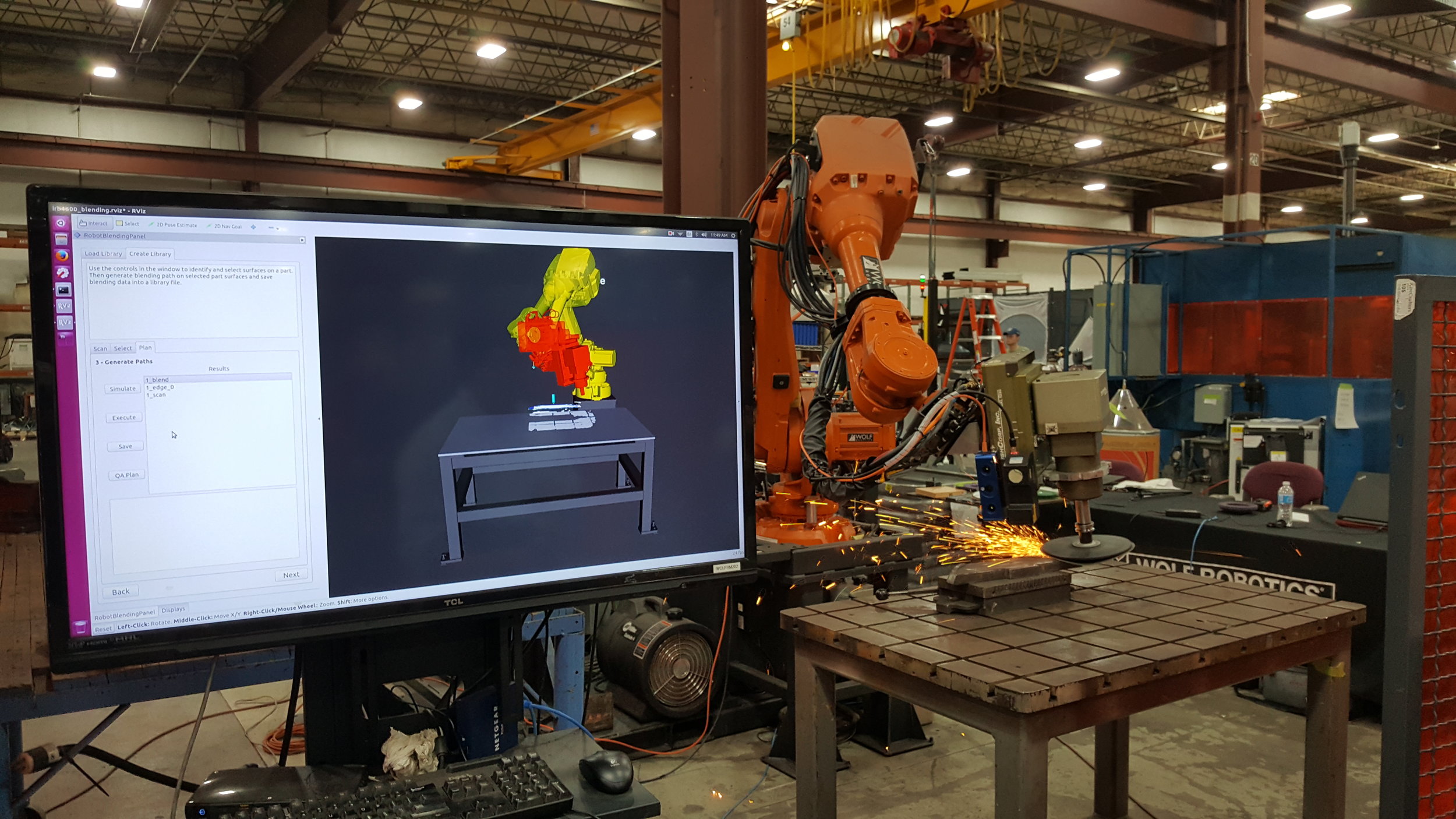

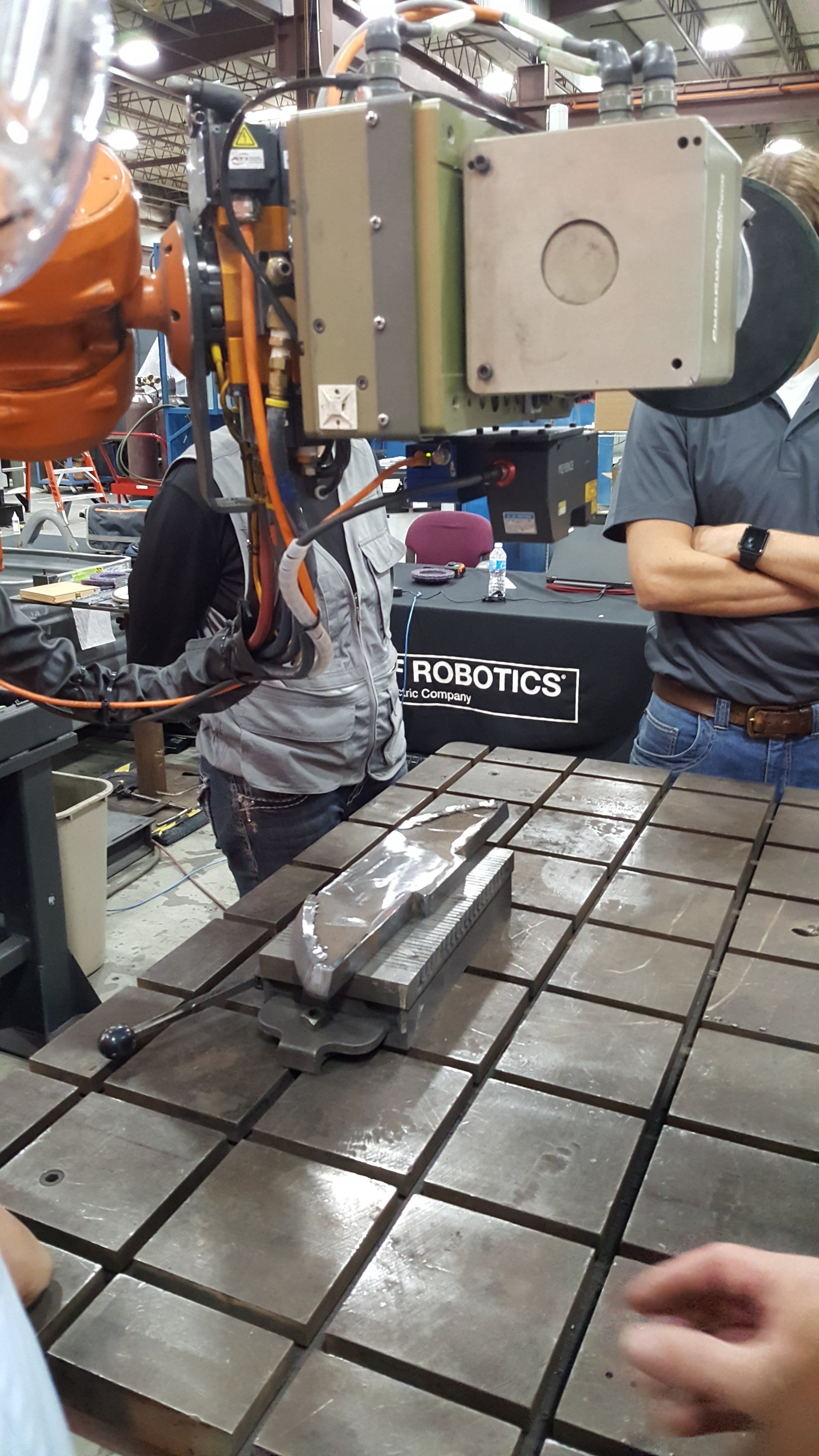

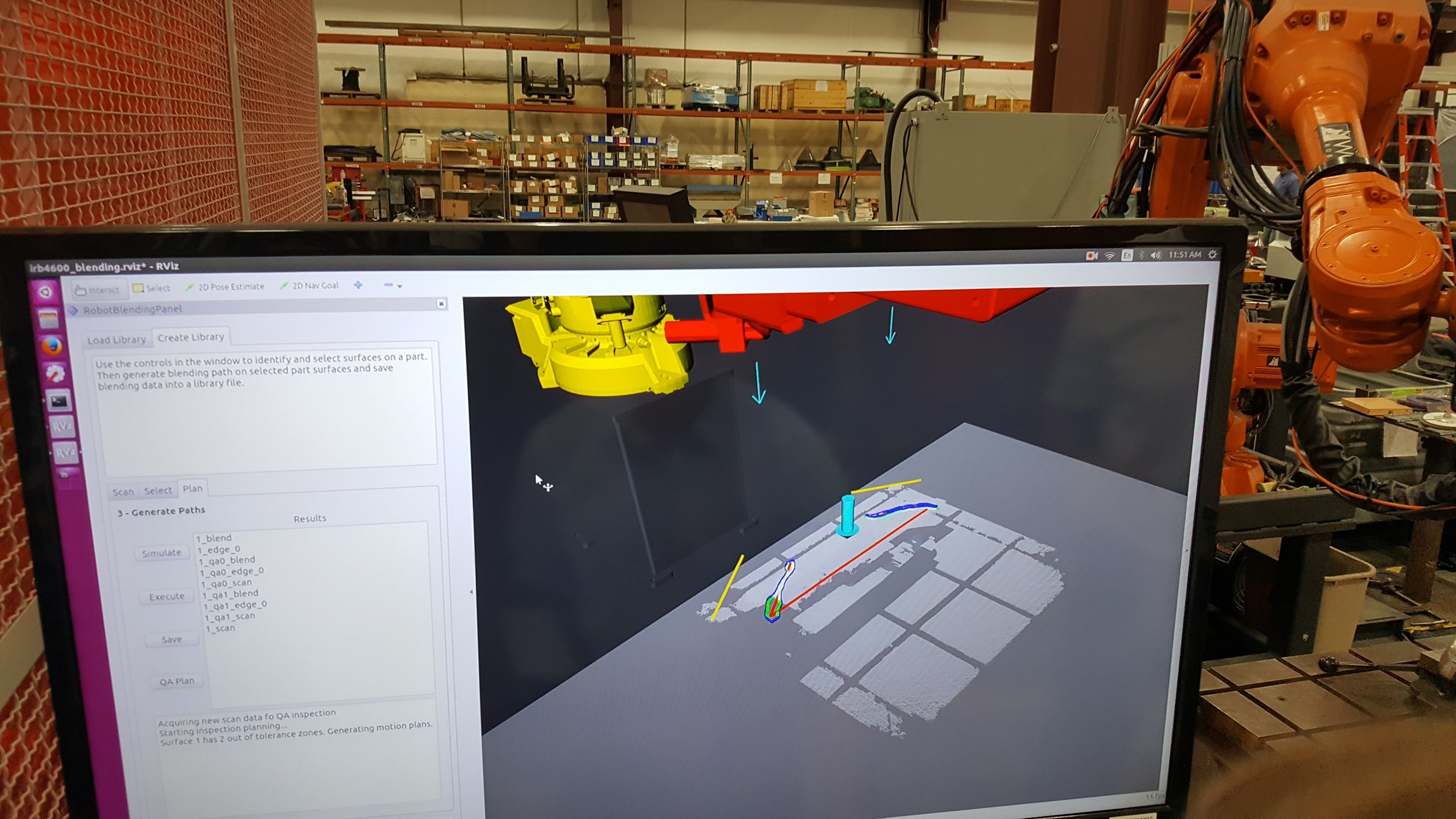

Day 3 started with a tour of ROS 2 robotic systems in the Robotics Department at SwRI, where the instructors offered insights into the capabilities of ROS 2 through tangible, working industrial examples.

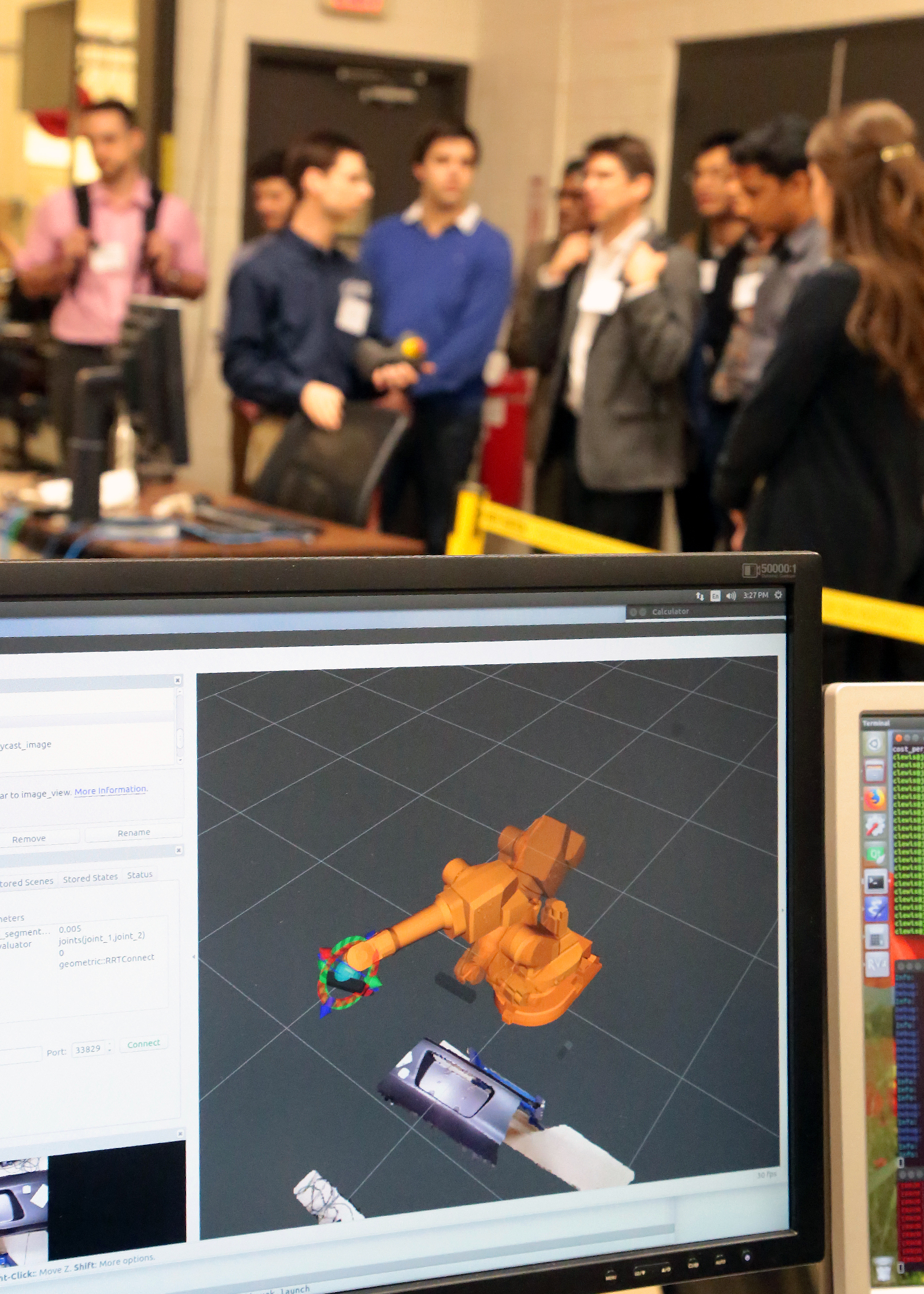

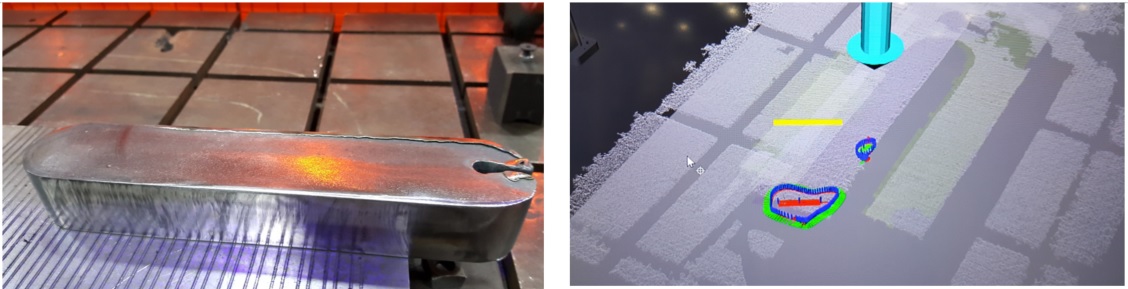

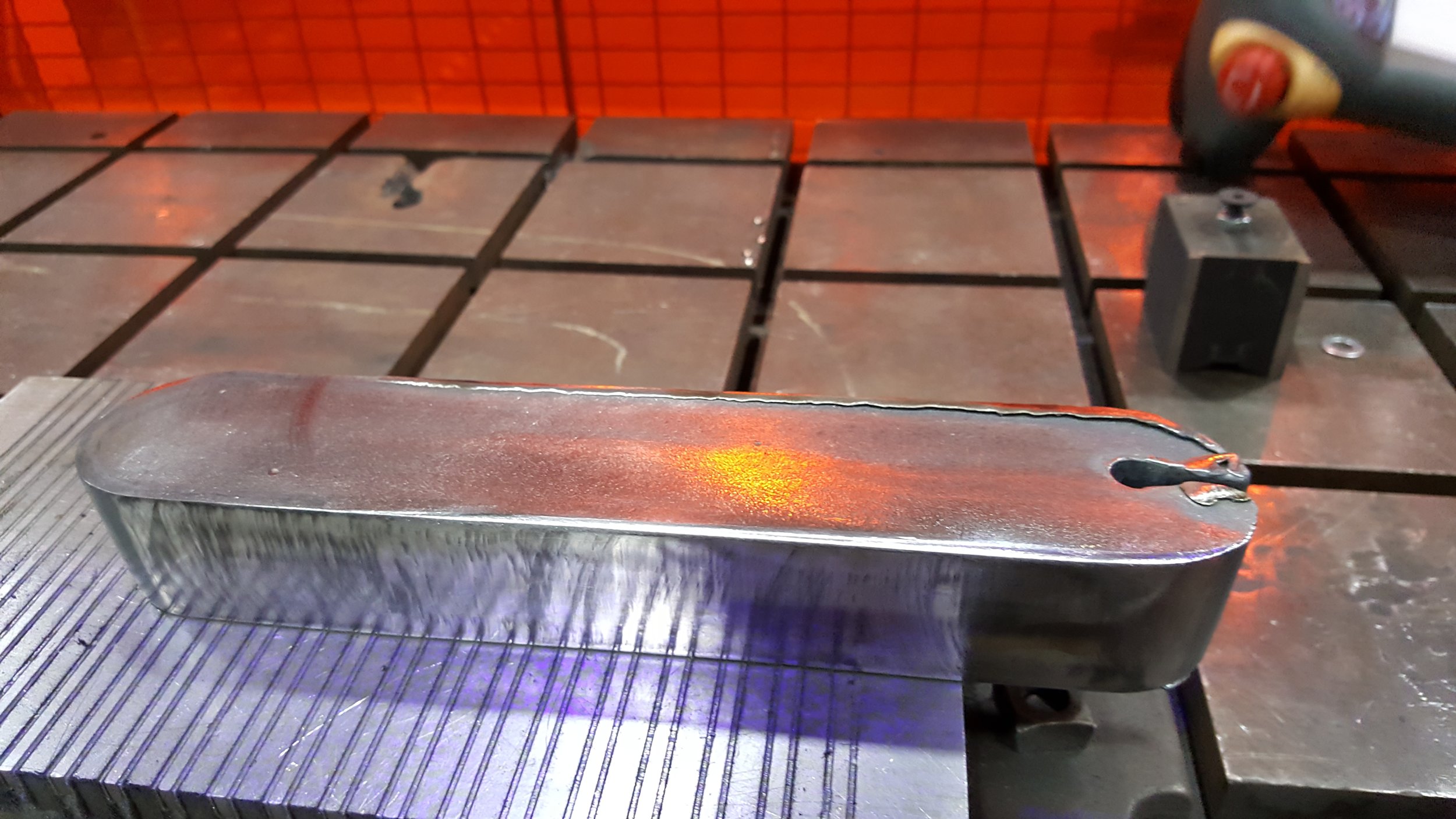

In-person attendees stepping through the scan-n-plan workshop demonstration.

To wrap up the training, participants shared with the instructors and the group how they intended to use ROS in their projects. For projects already under way, the instructors provided additional assistance to address issues in the attendees' application development back home.

Hybrid training always presents the challenge of making sure online attendees get the same attention as those in the room. However, it was rewarding to help attendees dig deeper into ROS 2, whether they were just starting out, expanding their solution set, or adding features to an ongoing project. As instructors, we look forward to seeing how attendees apply these new skills and to interacting with them through the repositories and various ROS/ROS-I collaborative events.

If you are interested in an upcoming training class, classes are regularly posted at https://rosindustrial.org/events-summary.