Teaching an Old Robot New Tricks

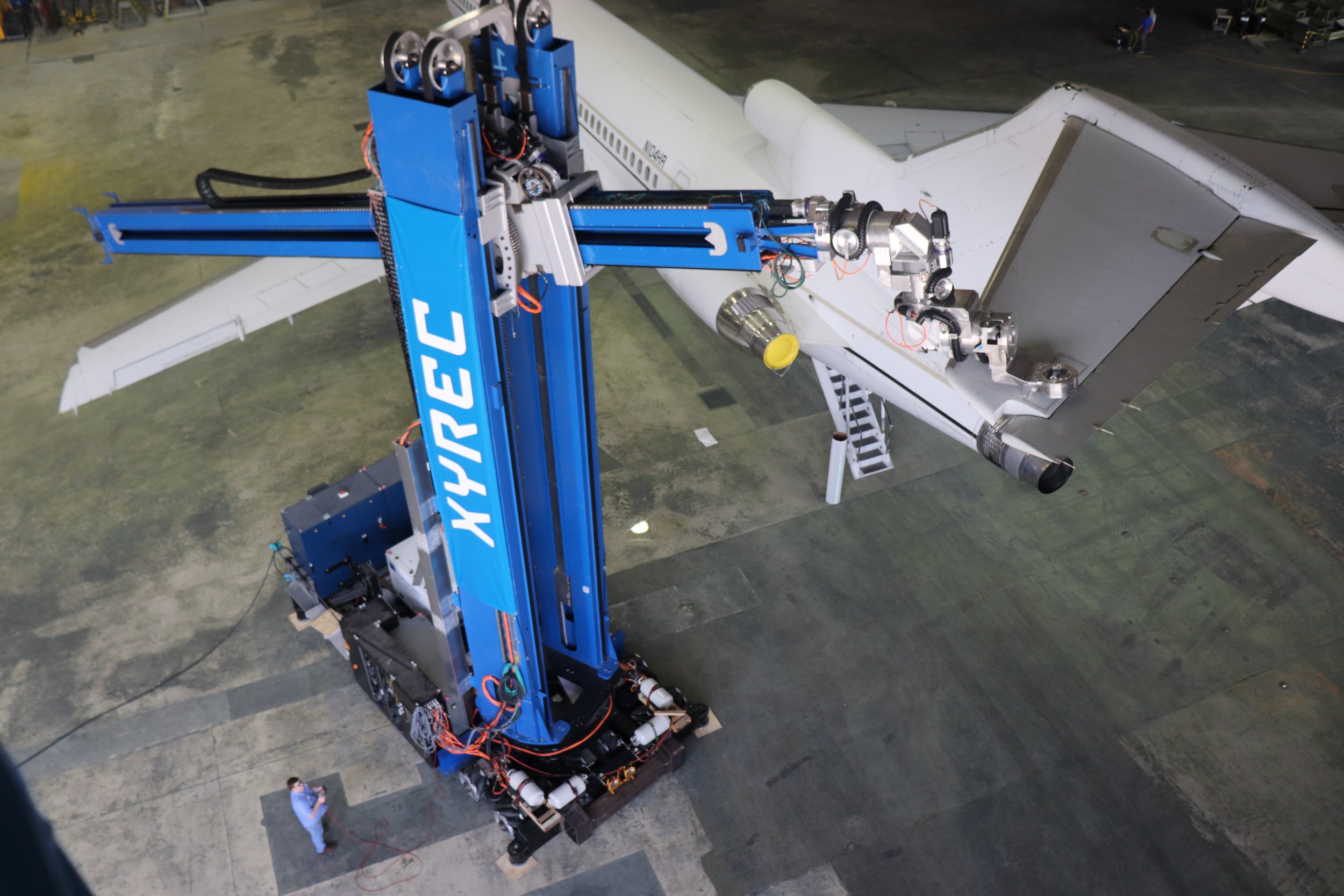

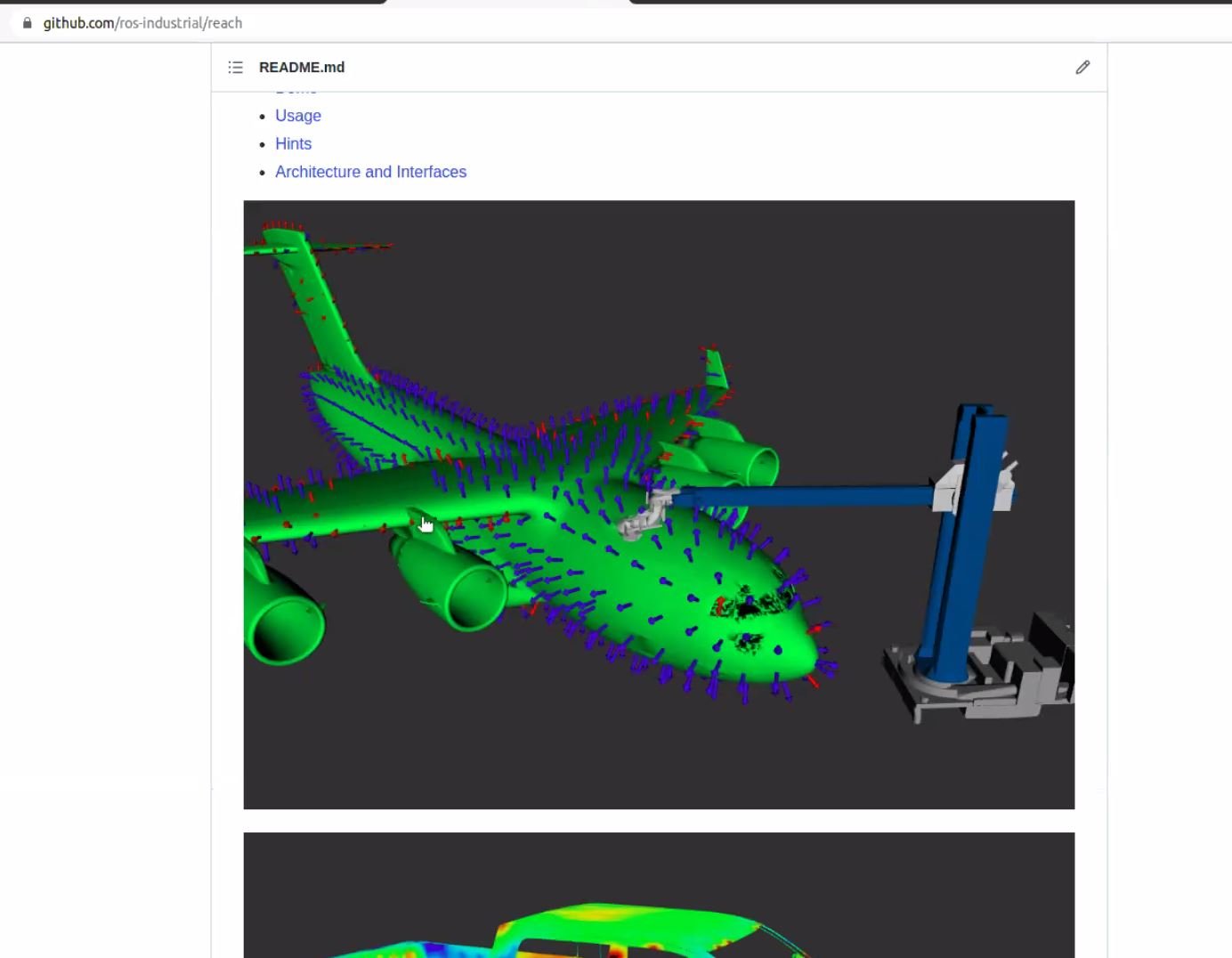

Robotics is increasingly present in our daily lives in one way or another. Although many people who hear the word 'Robotics' think of humanoid robots or of the robotic arms used in industry, robotics takes many forms and has many applications, from autonomous mobile robots (AMR) to standard industrial robots. Robots range in size from as small as the palm of your hand to large enough to reach the top of an airplane.

Xyrec laser coating removal robot (https://www.swri.org/industry/industrial-robotics-automation/blog/laser-coating-removal-robot-earns-2020-rd-100-award)

GAPTER (http://wiki.gaitech.hk/gapter/index.html)

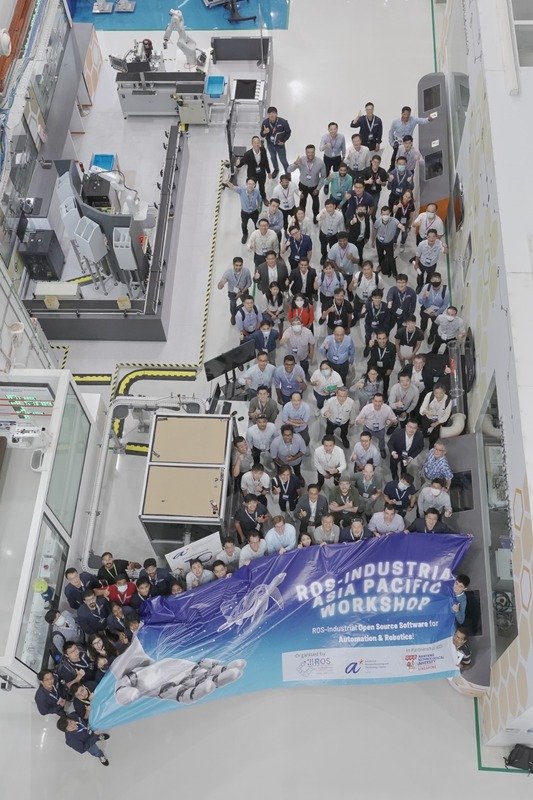

Robots, and in particular industrial robots, are programmed to perform specific functions. The Robot Operating System (ROS) is a very popular framework that facilitates asynchronous coordination between a robot and other drives and/or devices, and it has become a go-to means of developing advanced capabilities across the robotics sector.

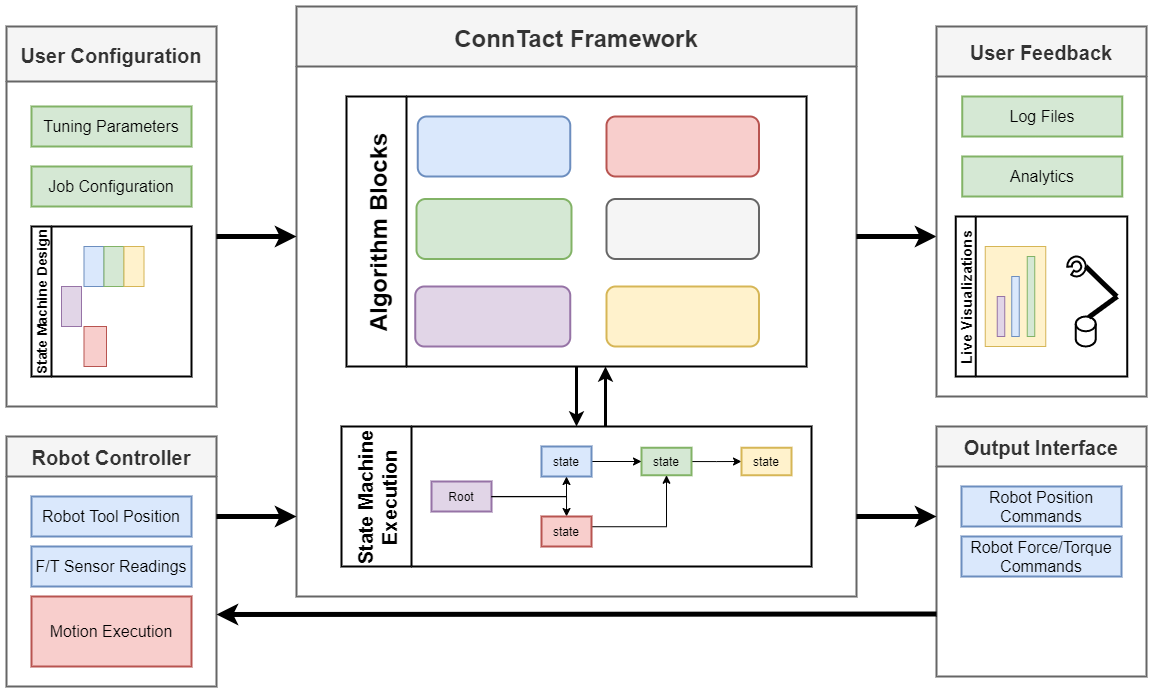

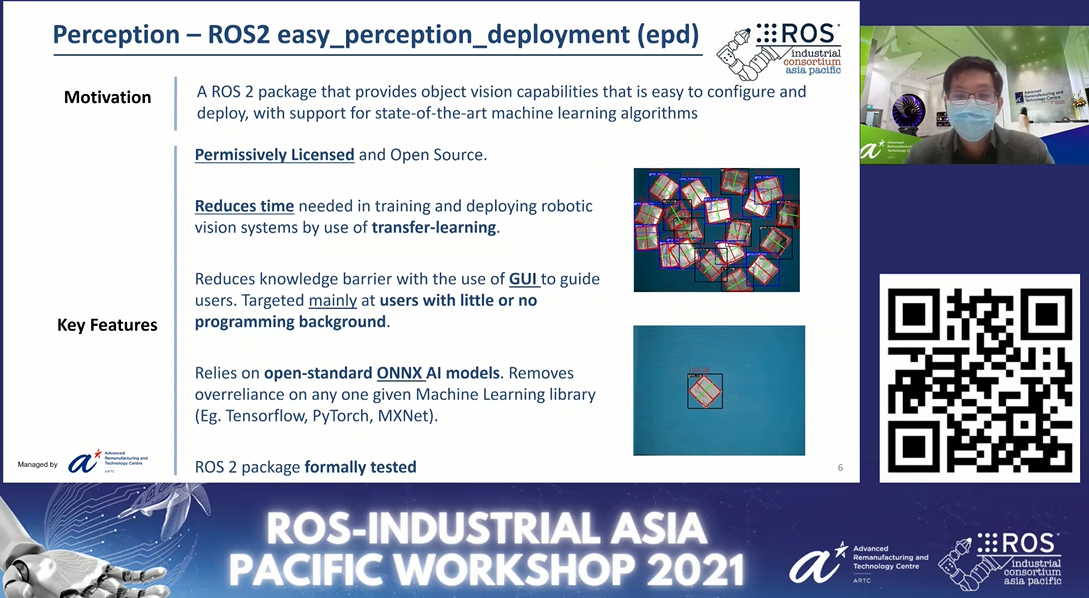

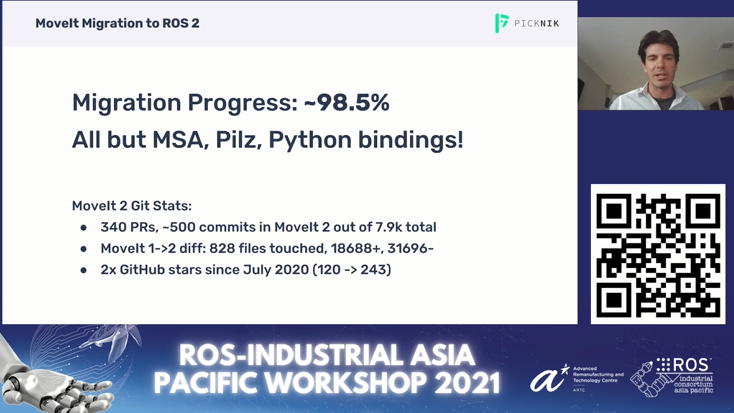

How ROS-I extends ROS and ROS 2 to industrially relevant hardware and applications

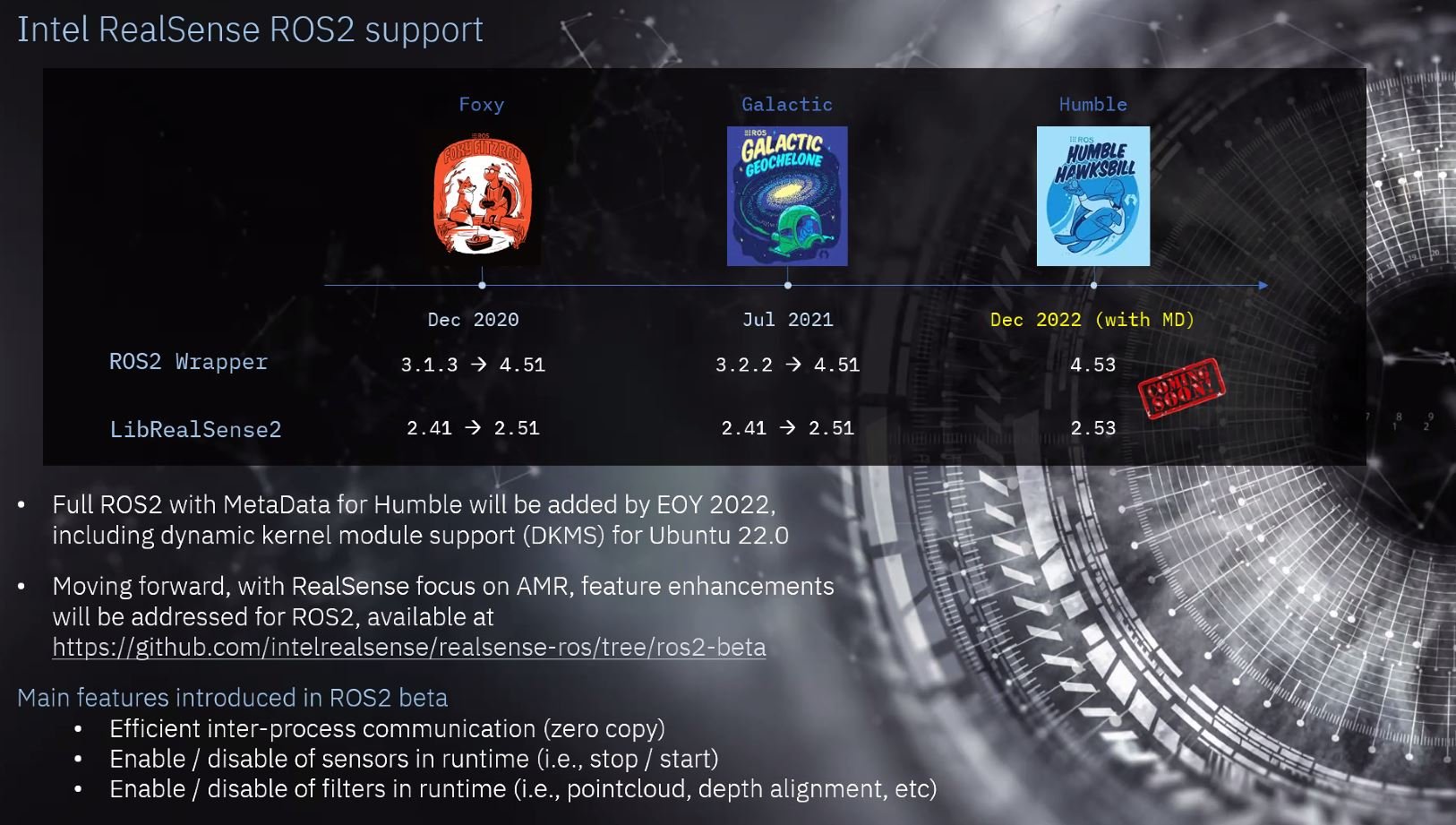

Southwest Research Institute (SwRI) and the broader ROS-I community often develop applications in ROS 2, the successor to ROS 1. In many cases, particularly where legacy application code is used, bridging back to ROS 1 is still very common, and it remains one of the challenges in supporting industry adoption of ROS. This post does not aim to explain ROS or the journey of migrating to ROS 2 in detail, but if you are interested, I invite you to read the following blogs by my colleagues and by our partners at Open Robotics/Open Source Robotics Foundation.

- Robot Operating System 2: Design, architecture, and use in the wild, linked in this blog post: https://www.openrobotics.org/blog/2022/5/12/science-robotics-paper

- ROS-I and managing a world of ROS 1 to ROS 2 transition in industry: https://www.swri.org/industry/industrial-robotics-automation/blog/the-ros-1-vs-ros-2-transition

- Curious about the ROS-Industrial open source project as a whole? https://ifr.org/post/from-academia-to-industry-and-beyond

Giving an Old Robot a New Purpose

Robots have been manufactured since the 1950s, and over time newer versions have naturally appeared with better properties and performance than their ancestors. This raises a question: how can you give new capabilities to older robots that are still functional? The question is becoming more important as the circular economy gains momentum and as more people understand that reusing a functional robot offsets the carbon footprint of manufacturing a new one. Each robot has its own capabilities and limitations, and those must be taken into account. Across the ROS-I community, the question "can I bring new life to this old robot?" comes up again and again, and this exact use case arose recently here at SwRI.

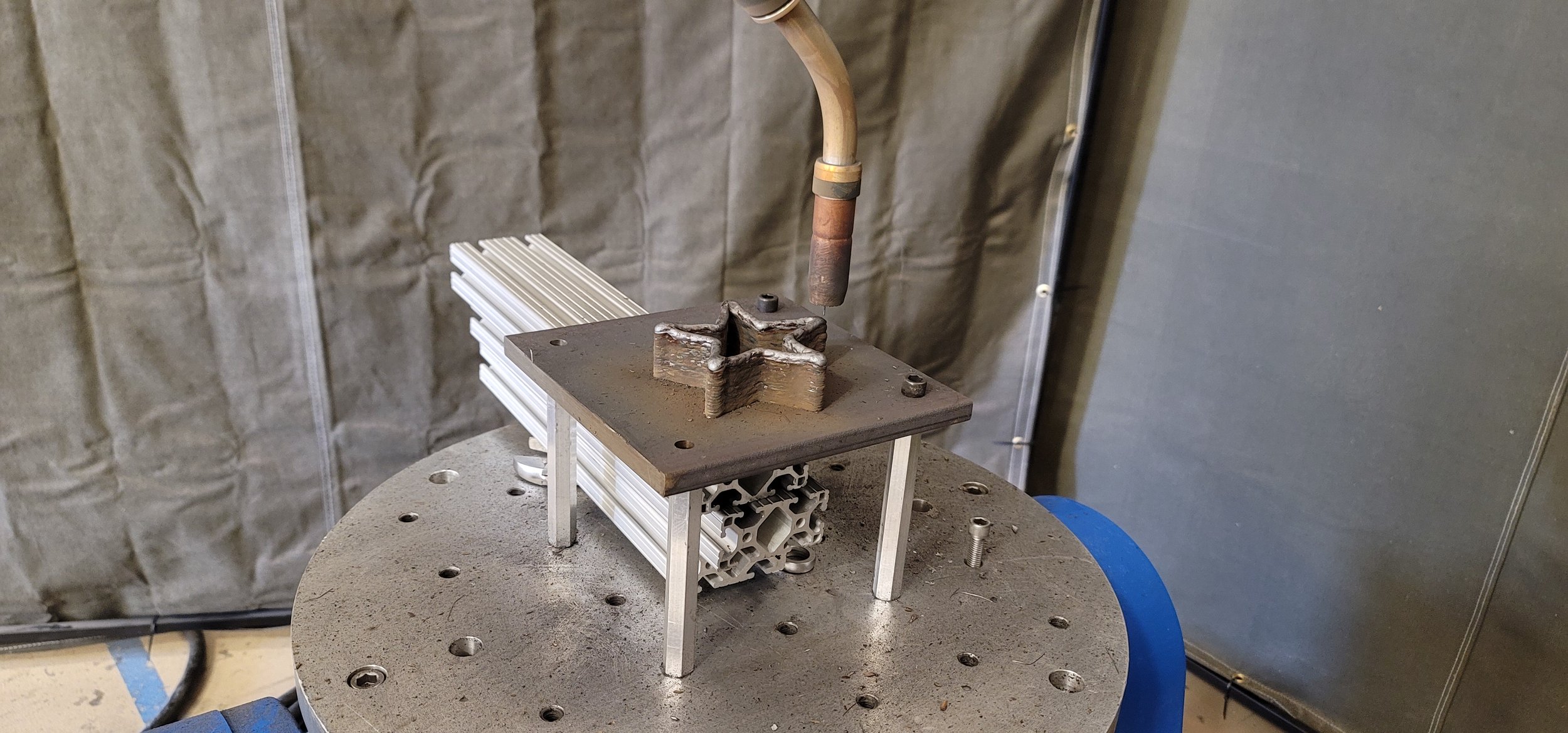

When an older Fanuc robot was acquired for the lab, it seemed a good candidate for a system that could demonstrate basic Scan-N-Plan capabilities in an easy-to-digest way and that would be constantly available for testing and demonstrations. The system was a demo unit from a former integration company and included an inverted Fanuc robot manufactured in 2008.

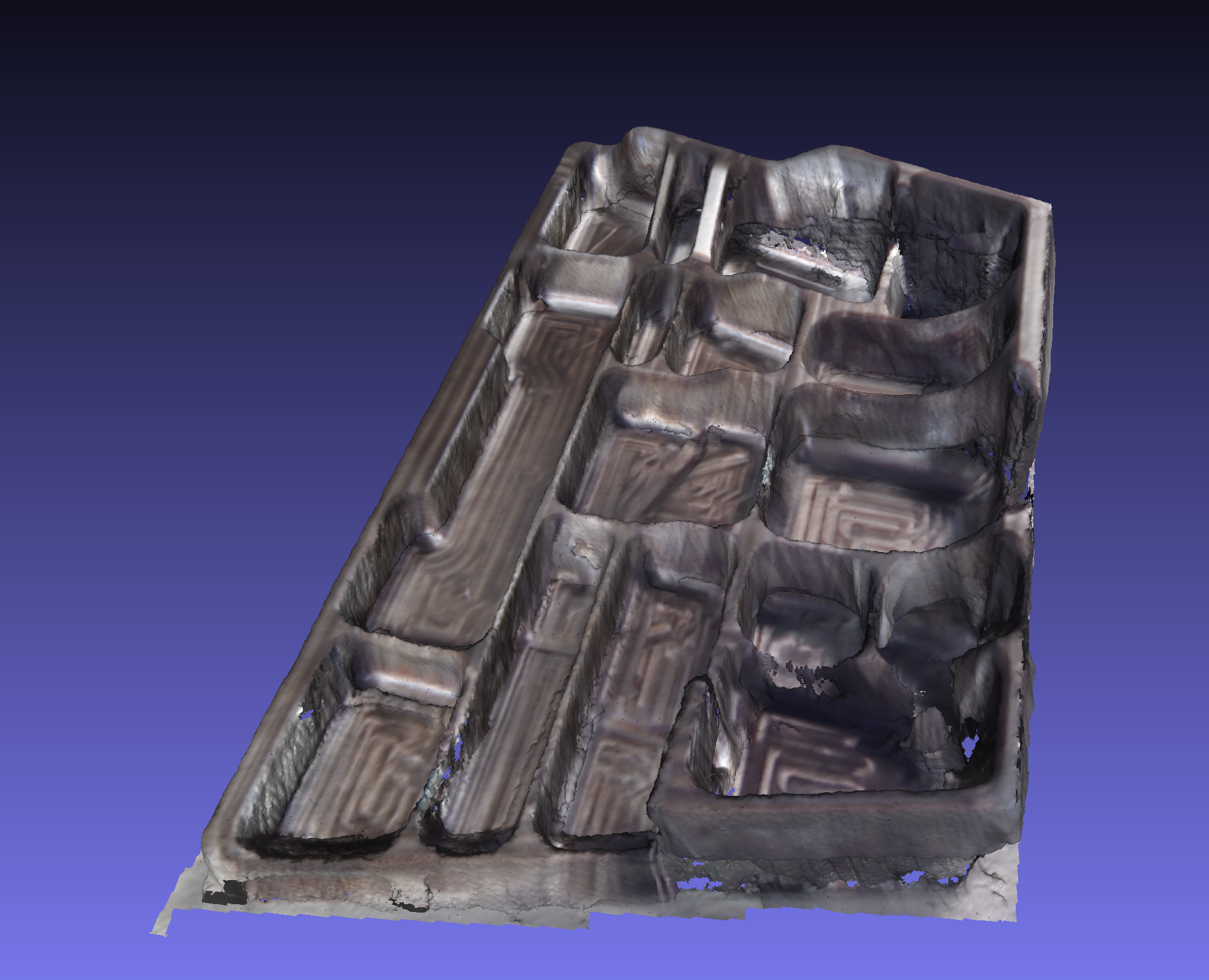

The demo envisioned for this system would be a basic Scan-N-Plan implementation that would locate and execute the cleaning of a mobile phone screen. Along the way, we encountered several obstacles which are described below.

Let's talk first about drivers: "a driver is a software component that lets the operating system and a device communicate with each other." Each robot has its own drivers so that it can communicate properly with whatever is instructing it how to move. Drivers are handled differently on a computer than on a robot, because a computer's driver can be updated faster and more easily. When device manufacturers identify errors, they release a driver update that corrects them. On a computer, you are notified that an update is available, and once you accept it the computer installs it. In the world of industrial robots, including the Fanuc in our lab, you need to manually upload the driver and the supporting software options to the robot controller. Once the driver software and options are installed, a fair amount of testing is needed to understand how your changes have affected the rest of the system. In some situations you may receive your robot with the options required for external system communication already installed; however, it is always advisable to check and confirm functionality.

An older robot will not communicate as fast as newer versions of the same model, so to get the best results you will want to update its communication drivers, if updates are available. The Fanuc robot comes with a controller that lets you operate it manually, via a teach pendant that stays in the user's hand at all times, or in automatic mode, in which it executes its instructions after a simple cycle start. In either case, all safety systems need to be functional and in the proper state for the system to operate.

Fast reporting of the robot's state is very important, because the computer's software (in this case our ROS application) needs to know where the robot is and whether it is performing its instructions correctly. This position is commonly known as the robot pose. For robotic arms, the information can be expressed as joint states, and your computer will probably have trouble with an older robot because, in auto mode, it reports these joint states more slowly than the ROS-based software expects. One way to solve this slow reporting is to update the drivers or to add the correct configuration for your program to the robot's controller, but that is not always possible or feasible.
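Before changing drivers or controller settings, it helps to measure how often joint states actually arrive. The sketch below is plain Python for illustration and is not part of any ROS API: in a ROS 2 node you would call `record()` from a subscription callback on the joint-state topic (conventionally `joint_states`, carrying `sensor_msgs/msg/JointState` messages) using each message's header stamp. The class name `JointStateRateMonitor`, the 50 Hz expected rate, and the thresholds are hypothetical values chosen for the example.

```python
from collections import deque


class JointStateRateMonitor:
    """Hypothetical helper: tracks joint-state arrival times (seconds) and estimates the rate."""

    def __init__(self, expected_hz: float, window: int = 20):
        self.expected_hz = expected_hz
        # Keep only the most recent `window` timestamps.
        self.stamps = deque(maxlen=window)

    def record(self, stamp: float) -> None:
        """Store the timestamp of one received joint-state message."""
        self.stamps.append(stamp)

    def rate_hz(self) -> float:
        """Average message rate over the window (0.0 with fewer than two samples)."""
        if len(self.stamps) < 2:
            return 0.0
        span = self.stamps[-1] - self.stamps[0]
        if span <= 0:
            return 0.0
        return (len(self.stamps) - 1) / span

    def is_too_slow(self, tolerance: float = 0.8) -> bool:
        """True if the measured rate is below `tolerance` times the expected rate."""
        return self.rate_hz() < tolerance * self.expected_hz

    def is_stale(self, now: float, max_age: float) -> bool:
        """True if no joint state has arrived, or the newest one is older than `max_age`."""
        return not self.stamps or (now - self.stamps[-1]) > max_age


if __name__ == "__main__":
    # Simulated old controller reporting at 10 Hz while the software expects 50 Hz.
    monitor = JointStateRateMonitor(expected_hz=50.0)
    for i in range(11):
        monitor.record(i * 0.1)
    print(round(monitor.rate_hz(), 1))  # 10.0
    print(monitor.is_too_slow())  # True
```

A sliding window is used so that a single late message does not dominate the estimate, while a sustained slowdown in auto mode still shows up clearly.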

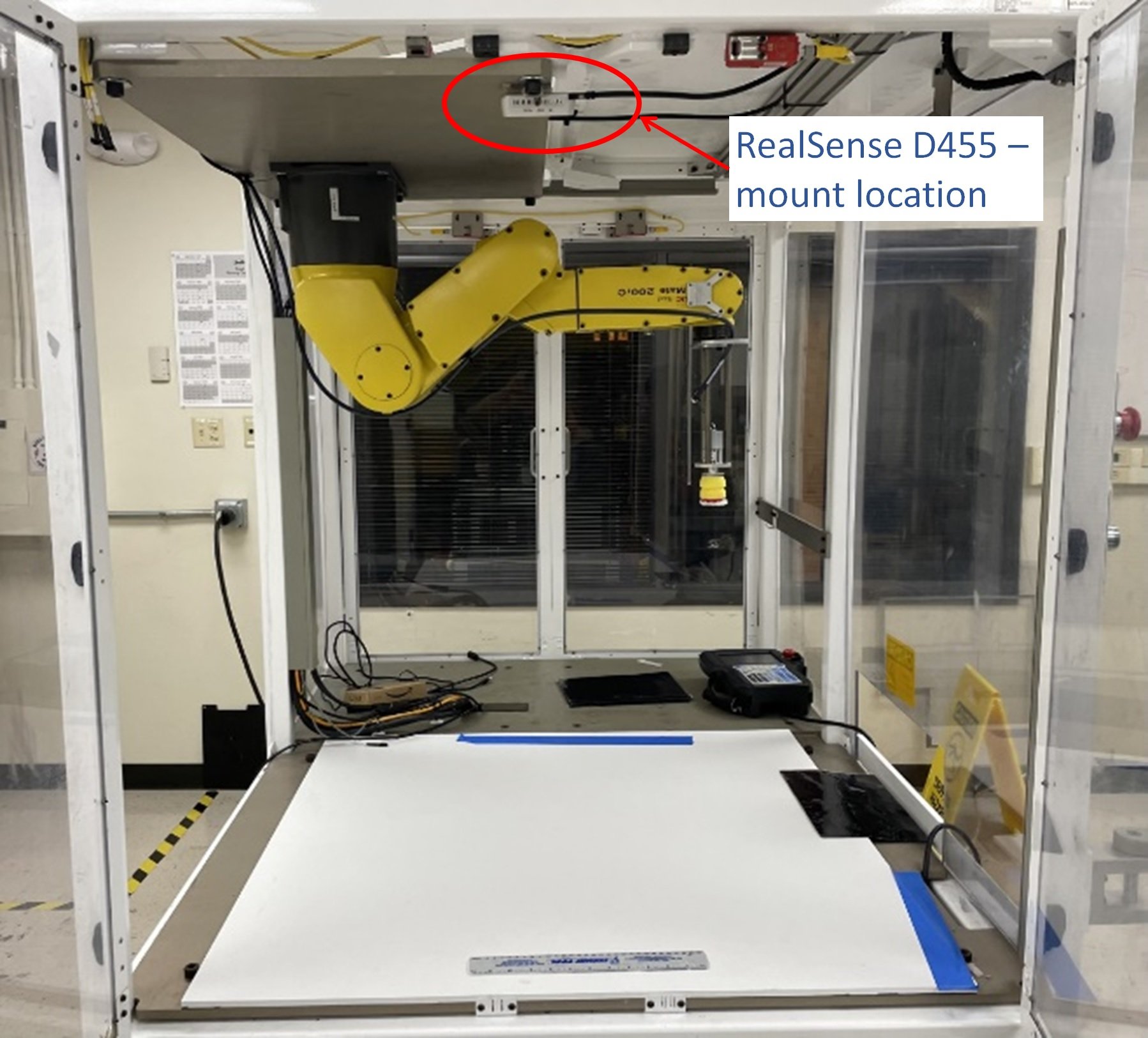

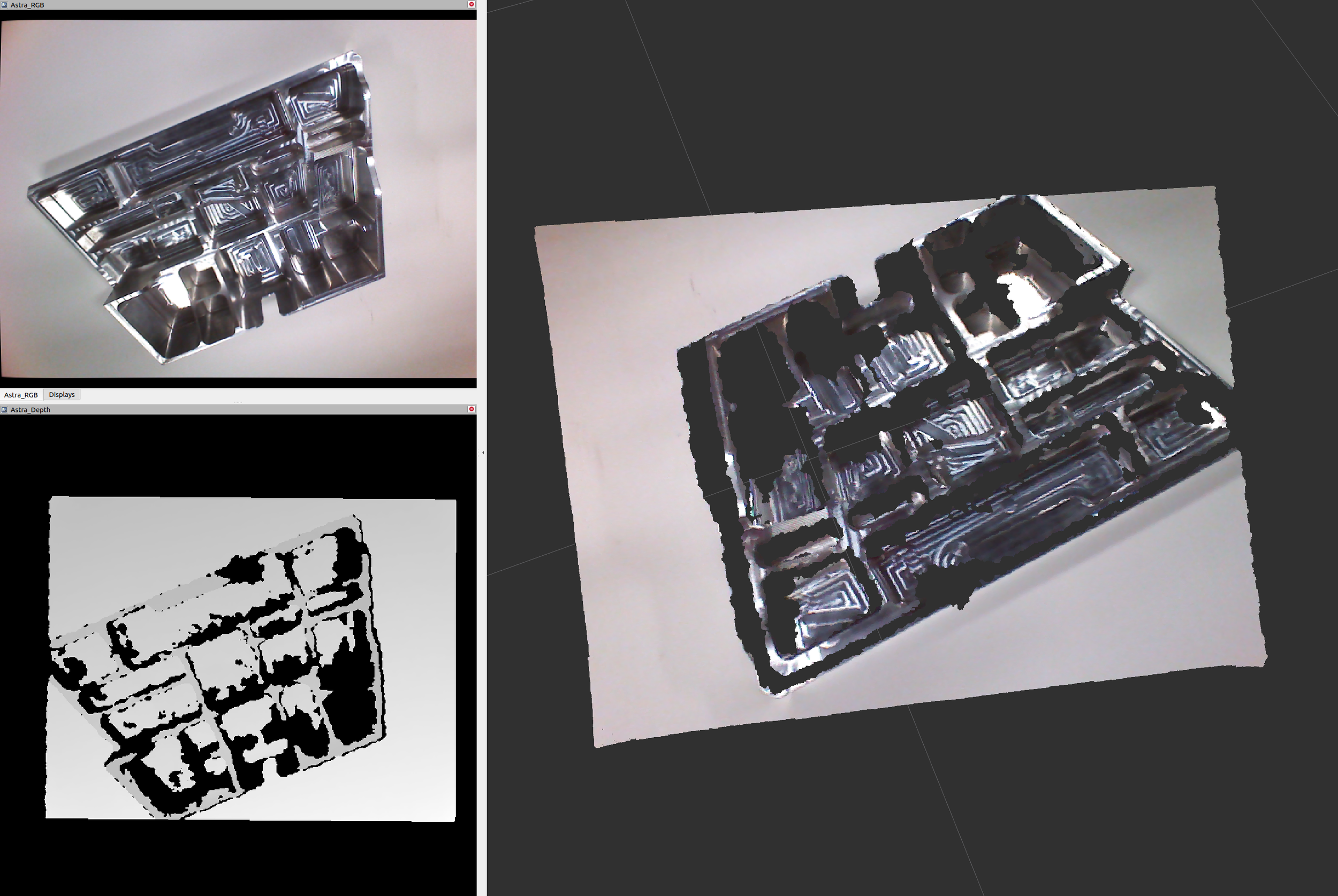

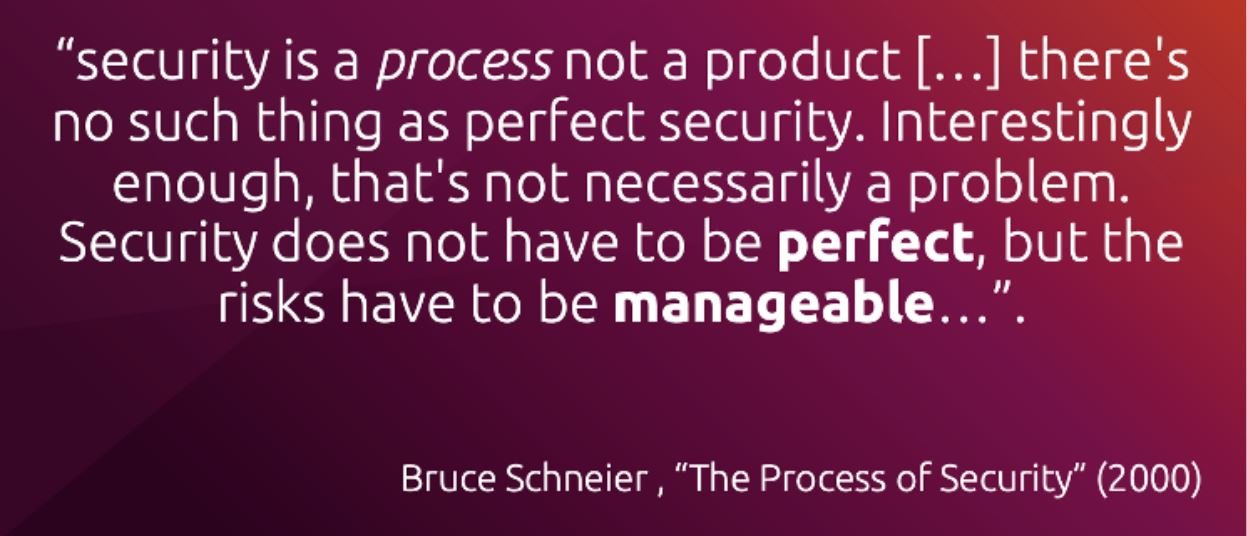

Updated location of the RGB-D camera in the Fanuc cell

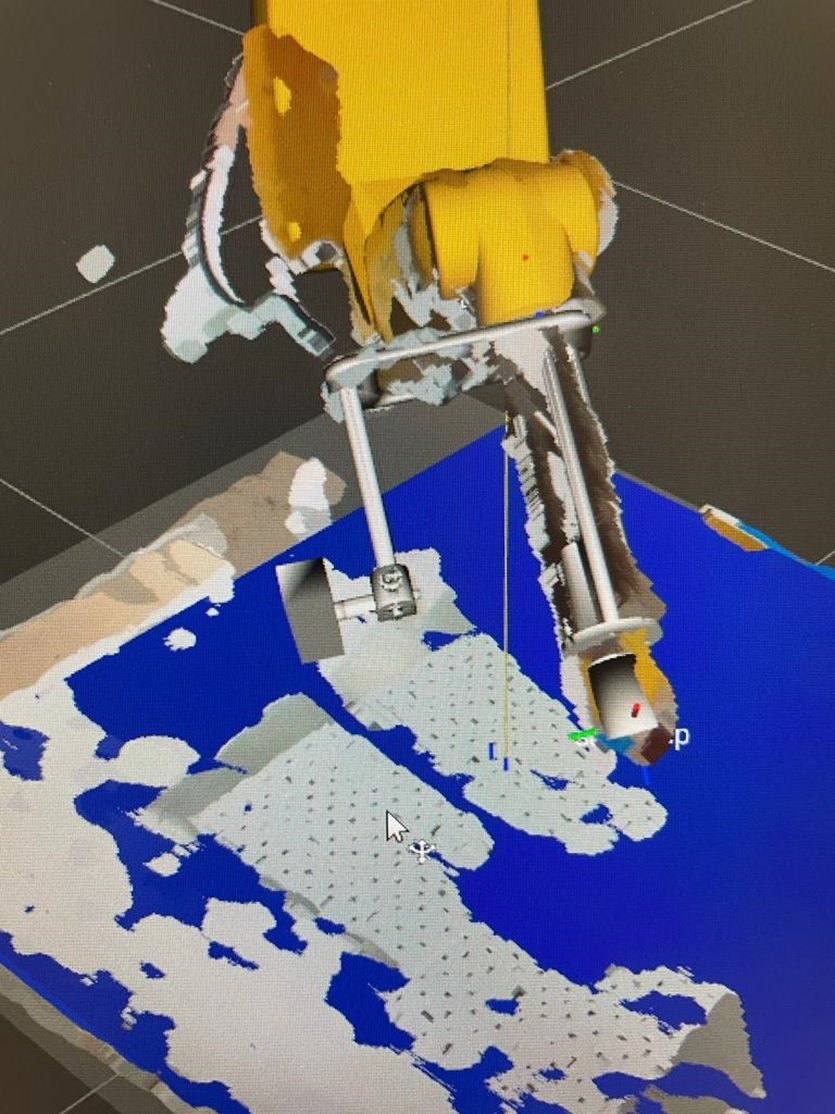

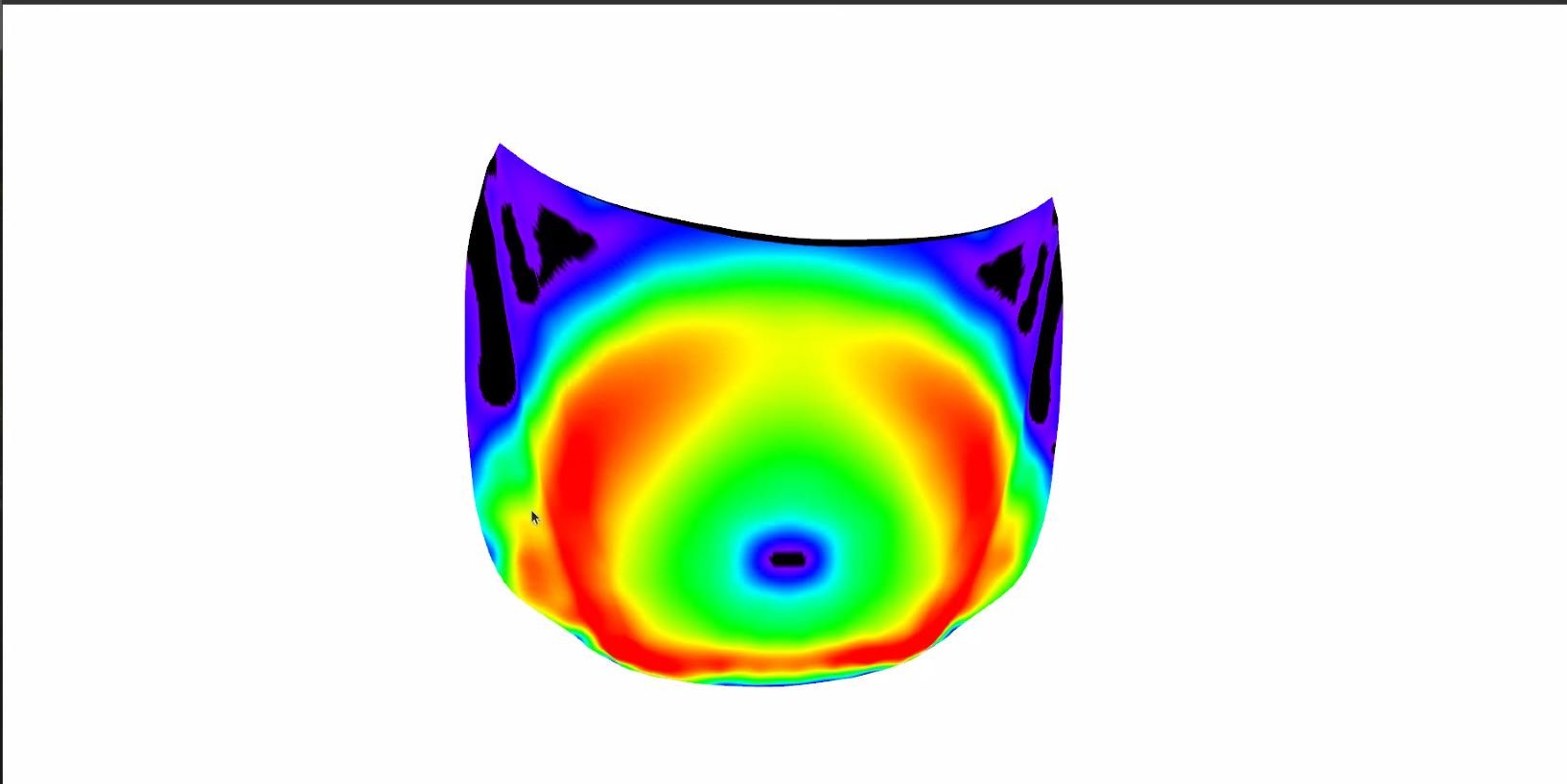

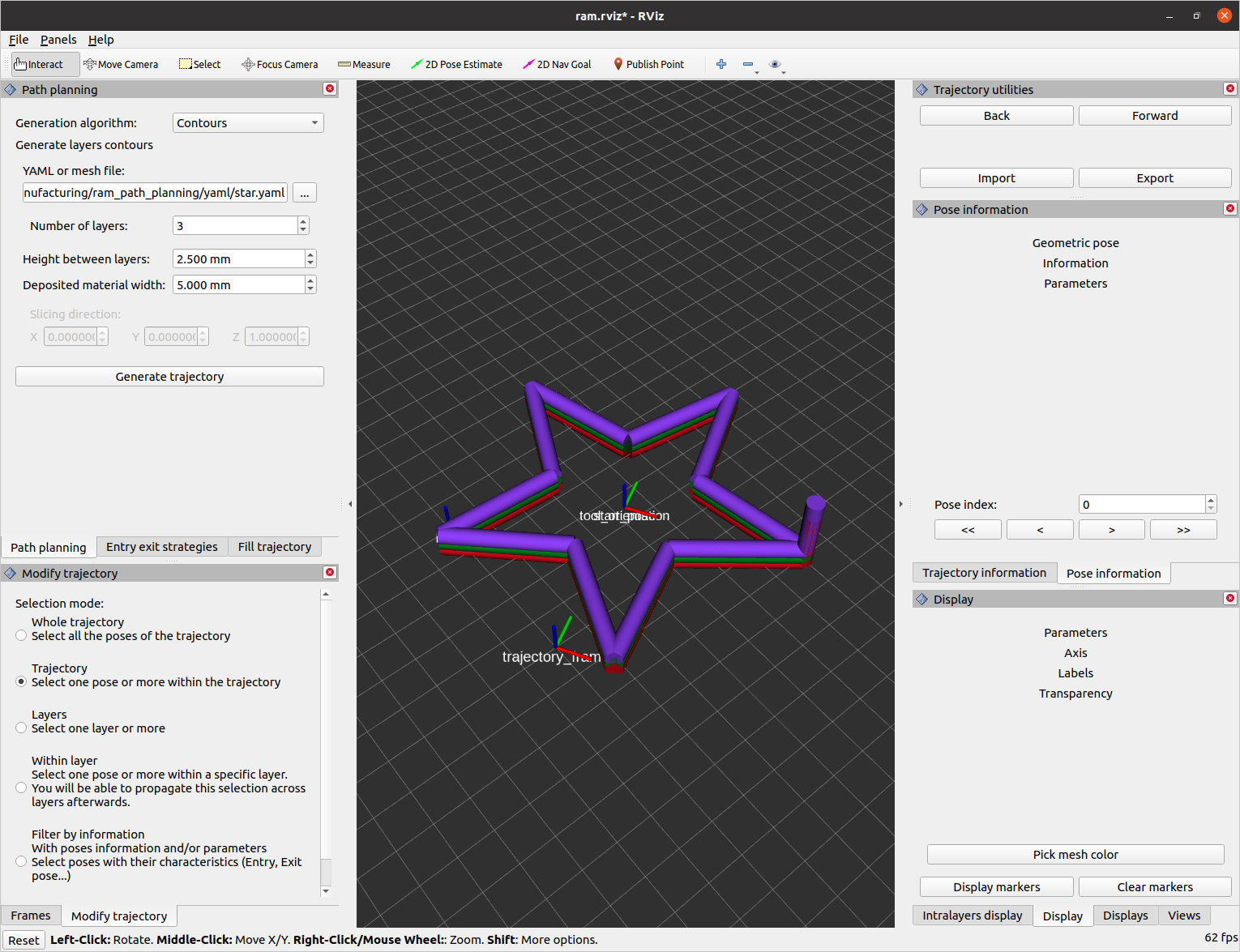

Another way to make the system behave as expected is to calibrate an RGB-D camera against the robot. To do this, place the robot in a position where most of it is visible to the camera. Then compare the camera's projection with the URDF, the file that describes the robot model used in simulation. With both representations displayed, in RViz for example, adjust the origin of camera_link until the projection lines up with the URDF model.
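Adjusting camera_link by eye amounts to searching for the origin (x, y, z, roll, pitch, yaw) that makes the camera's points land on the robot model. The sketch below scores a candidate origin by the root-mean-square distance between camera points mapped into the robot frame and matching points on the model, then automates the "nudge and check" loop with a simple compass search. It assumes point correspondences are already known, which is a simplification of what you judge visually in RViz; all function names and sample numbers are hypothetical, and this is not the procedure the post describes, only an illustration of what the manual alignment is optimizing.

```python
import math


def rpy_to_matrix(roll, pitch, yaw):
    """Rotation matrix Rz(yaw) @ Ry(pitch) @ Rx(roll), the convention URDF uses for rpy."""
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def transform(origin, point):
    """Map a camera-frame point into the robot frame using origin (x, y, z, roll, pitch, yaw)."""
    x, y, z, roll, pitch, yaw = origin
    r = rpy_to_matrix(roll, pitch, yaw)
    return tuple(
        sum(r[i][j] * point[j] for j in range(3)) + t
        for i, t in enumerate((x, y, z))
    )


def alignment_rms(origin, camera_points, model_points):
    """Root-mean-square distance between mapped camera points and their matching model points."""
    total = 0.0
    for cam_pt, model_pt in zip(camera_points, model_points):
        total += sum((a - b) ** 2 for a, b in zip(transform(origin, cam_pt), model_pt))
    return math.sqrt(total / len(camera_points))


def refine_origin(origin, camera_points, model_points, step=0.05, min_step=1e-4):
    """Compass search: nudge one parameter at a time, keep any nudge that lowers the
    error, and halve the step when no nudge helps (an automated version of hand-tuning)."""
    best = list(origin)
    best_err = alignment_rms(best, camera_points, model_points)
    while step > min_step:
        improved = False
        for i in range(6):
            for delta in (step, -step):
                trial = list(best)
                trial[i] += delta
                err = alignment_rms(trial, camera_points, model_points)
                if err < best_err:
                    best, best_err = trial, err
                    improved = True
        if not improved:
            step /= 2
    return best, best_err


if __name__ == "__main__":
    # Hypothetical data: model points generated from a made-up "true" camera origin.
    true_origin = (0.4, -0.1, 1.8, 0.0, 0.6, 0.1)
    camera_points = [(0.1, 0.0, 1.0), (-0.2, 0.1, 1.3), (0.0, -0.3, 1.1), (0.25, 0.2, 1.5)]
    model_points = [transform(true_origin, p) for p in camera_points]

    guess = (0.45, -0.05, 1.75, 0.05, 0.55, 0.05)  # rough hand-measured starting origin
    start_err = alignment_rms(guess, camera_points, model_points)
    refined, end_err = refine_origin(guess, camera_points, model_points)
    print(f"RMS error: {start_err:.4f} m -> {end_err:.4f} m")
    print("refined origin:", [round(v, 3) for v in refined])
```

The refined values would then go into the `xyz` and `rpy` attributes of the camera joint's origin in the URDF.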

For the Scan-N-Plan application, the RGB-D camera was originally mounted on the robot's end-effector, but when we encountered this joint state delay, we moved the camera to a strategic position on the roof of the robot's enclosure, where it could view the base and the Fanuc robot for calibration against the simulation model, as shown in the photos. In addition, we ran the robot in manual mode, in which the user holds the teach pendant and tells the robot to start executing the instructions in the program generated by our ROS-based Scan-N-Plan application.

Confirming views of camera-to-robot calibration
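Why moving the camera off the end-effector helps with the joint state delay can be illustrated with a planar two-link arm. With a wrist-mounted camera, every point the camera sees has to be mapped into the robot base frame through forward kinematics at the current joint angles, so a stale joint state shifts the whole scan. A camera fixed to the enclosure only needs its constant, calibrated pose. The link lengths, joint angles, and camera pose below are made-up values, and the 2D model is a deliberate simplification of a real industrial arm.

```python
import math


def tool_pose(q1, q2, l1=0.6, l2=0.5):
    """Planar forward kinematics: (x, y, heading) of the tool for joint angles q1, q2."""
    x = l1 * math.cos(q1) + l2 * math.cos(q1 + q2)
    y = l1 * math.sin(q1) + l2 * math.sin(q1 + q2)
    return x, y, q1 + q2


def camera_to_base(pose, point):
    """Map a 2D point seen by a camera whose frame is at `pose` into the base frame."""
    x, y, theta = pose
    px, py = point
    c, s = math.cos(theta), math.sin(theta)
    return (x + c * px - s * py, y + s * px + c * py)


# Wrist-mounted camera: the mapped point depends on the joint state.
true_q = (0.50, -0.30)   # where the arm actually is
stale_q = (0.45, -0.30)  # last joint state received, lagging behind the motion
seen = (0.2, 0.0)        # point observed 0.2 m in front of the camera

true_pt = camera_to_base(tool_pose(*true_q), seen)
stale_pt = camera_to_base(tool_pose(*stale_q), seen)
wrist_error = math.dist(true_pt, stale_pt)

# Fixed (roof-mounted) camera: a constant calibrated pose, so joint lag never enters.
FIXED_CAMERA_POSE = (0.0, 1.5, -math.pi / 2)
fixed_pt = camera_to_base(FIXED_CAMERA_POSE, seen)

print(f"wrist-mounted error from stale joints: {wrist_error * 1000:.1f} mm")
print(f"fixed-camera point: ({fixed_pt[0]:.3f}, {fixed_pt[1]:.3f})")
```

Here a lag of only 0.05 rad on the first joint moves the reconstructed point by several centimeters, while the fixed-camera mapping has no joint angles in it at all.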

Where we landed and what I learned

We successfully gave this old robot a new purpose, and in this post I have shared how we did it and how to solve some of the issues you might encounter. While not as easy as a project on “This Old House”, you can teach an old robot new tricks. It is very important to know your robot's control platform: a problem may lie not in your code but in the robot itself. So it is always good to first make sure that the robot, its controller, and its software work well, and then seek alternatives that enable the new functionality within the constraints of your available hardware. Although this is not always the most efficient path to a solution, older robots can deliver value when you design the approach systematically, work within the constraints of your hardware, and take advantage of the tools available, in particular those in the ROS ecosystem.